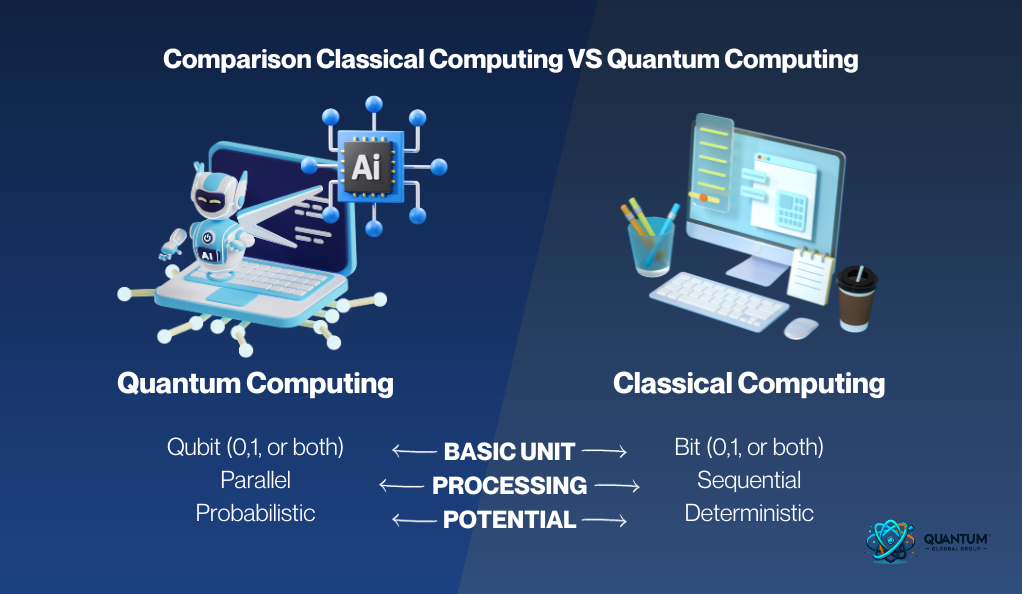

Quantum computing has been heralded as a new computational paradigm, promising large speedups on problems that are intractable for classical computers. Yet while the quantum realm invites curiosity and excitement, a practical question arises: which alternatives can address complex computational challenges without relying on quantum hardware? Given the difficulty of building scalable quantum systems, exploring alternative technologies is not only prudent but essential.

One of the most noteworthy alternatives to quantum computing is classical machine learning. Unlike its quantum counterpart, classical machine learning runs on conventional hardware, using statistical techniques and computational power to analyze large datasets, identify patterns, and make predictions. With techniques such as deep learning and reinforcement learning, it can now solve increasingly complex problems across domains ranging from finance to healthcare.

Yet can machine learning alone meet the computational demands expected in the coming decades? Some problems demand more than raw speed: they call for solutions that elude classical approaches because of limits on processing power and algorithmic efficiency. For instance, optimization problems in logistics or pharmaceuticals require not only fast computation but also effective search through high-dimensional spaces, which is a formidable challenge for traditional systems.
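To make the scale of such search spaces concrete, consider a delivery-routing problem as a purely illustrative case (the article itself does not name a specific problem). For a symmetric round trip through n stops there are (n − 1)!/2 distinct routes, so exhaustive search becomes impractical very quickly. The sketch below simply evaluates that formula; the helper name `tour_count` and the sample sizes are hypothetical.

```python
import math


def tour_count(n_stops: int) -> int:
    """Number of distinct round trips through n_stops locations.

    Assumes a symmetric problem (A -> B costs the same as B -> A), so
    fixing the start and ignoring direction gives (n - 1)! / 2 tours.
    """
    if n_stops < 3:
        raise ValueError("need at least 3 stops for a non-trivial tour")
    return math.factorial(n_stops - 1) // 2


if __name__ == "__main__":
    # Print how fast the number of candidate routes grows.
    for n in (5, 10, 15, 20):
        print(f"{n:>2} stops: {tour_count(n):,} possible tours")
```

Growth of this kind is why practical solvers rely on heuristics and approximation rather than enumerating every candidate.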

Enter High Performance Computing (HPC). HPC combines the processing power of many classical systems to tackle large-scale computations. With parallel architectures and algorithms designed to exploit them, HPC can simulate complex physical systems, model climate patterns, and run intricate data analyses efficiently. Because it is built from mature classical hardware, HPC sidesteps the scaling difficulties of quantum systems while still delivering impressive computational results.

Nevertheless, can HPC remain a suitable alternative as technology evolves? Despite its strengths, HPC requires substantial investment in hardware and infrastructure, which puts it out of reach for some organizations. Additionally, as problems grow more complex, adding nodes yields diminishing returns: communication overhead and data-transfer bottlenecks limit how efficiently HPC systems scale.
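One common way to reason about these scaling limits is Amdahl's law, which bounds the speedup of a program whose parallelizable fraction is p when it runs on n workers. The sketch below extends it with a linear communication cost per extra worker. That extra term, the function name `modeled_speedup`, and the sample values are illustrative assumptions, not measurements of any real system.

```python
def modeled_speedup(parallel_fraction: float, workers: int,
                    comm_cost: float = 0.0) -> float:
    """Amdahl's-law speedup with a toy communication penalty.

    Runtime is normalised so the single-worker time is 1.0:
      serial part   = 1 - parallel_fraction
      parallel part = parallel_fraction / workers
      communication = comm_cost * (workers - 1)   # assumed linear model
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if workers < 1:
        raise ValueError("workers must be >= 1")
    serial = 1.0 - parallel_fraction
    parallel = parallel_fraction / workers
    communication = comm_cost * (workers - 1)
    return 1.0 / (serial + parallel + communication)


if __name__ == "__main__":
    # Compare the ideal curve with the overhead-adjusted one.
    for n in (1, 16, 64, 256, 1024):
        ideal = modeled_speedup(0.95, n)
        with_comm = modeled_speedup(0.95, n, comm_cost=0.001)
        print(f"{n:>4} workers: ideal {ideal:5.2f}x, "
              f"with overhead {with_comm:5.2f}x")
```

With a 95% parallel fraction the ideal speedup can never exceed 20x however many workers are added, and under the assumed overhead term, adding workers beyond a certain point makes the job slower rather than faster.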

Where accessibility and flexibility matter, cloud computing emerges as another viable alternative. The cloud mantra of “compute on demand” lets users harness vast computational resources without the capital expenditure of owning infrastructure. With the rapid growth of cloud capabilities, including serverless computing and containerization, organizations can scale their computational resources to match immediate requirements. This flexibility offers a significant advantage, particularly for fluctuating workloads such as web services or seasonal analytics.

Moreover, the parallel rise of edge computing adds a compelling dimension to the discussion. By processing data closer to its source, edge computing reduces the latency of round trips to a centralized cloud. This shift has significant implications for real-time data analysis in the Internet of Things (IoT), where timely results can influence industrial automation, smart cities, and even autonomous vehicles. Yet a challenge persists: as devices proliferate and data volumes soar, how do we ensure that the computational architecture remains resilient and adaptive?

Furthermore, natural language processing (NLP) tools have gained traction in recent years, streamlining tasks that were once resource-intensive and time-consuming. Transformer models such as BERT and GPT have proven remarkably capable at interpreting and generating human language, making them valuable in fields such as customer service, content creation, and legal analysis. However, as demand for NLP systems grows alongside global communication and digital interaction, their scalability may come under scrutiny. Can they evolve quickly enough to meet the needs of nuanced communication and complex human interaction?

Specialized hardware offers another practical alternative. Graphics Processing Units (GPUs) and Application-Specific Integrated Circuits (ASICs) have demonstrated high efficiency on specific tasks such as rendering graphics or executing large numerical calculations. Their parallel architecture allows substantial speed-ups over general-purpose CPUs on massively data-parallel jobs. However, does this specialization tie them to certain problems, limiting their adaptability across industries?

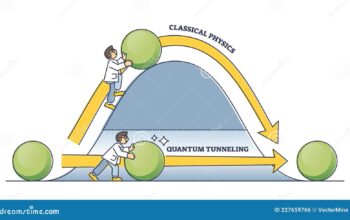

Hybrid approaches offer another way through the computational landscape. By integrating classical computing with emerging technologies such as quantum annealers or neuromorphic computing, researchers can combine the strengths of each to devise solutions for complex problems. However, this hybridization requires rethinking methodological frameworks and may provoke pushback from purists in either camp. How do we reconcile the traditionally siloed fields of computing to foster collaboration and innovation?

In conclusion, while quantum computing attracts fervent interest as a potentially revolutionary technology, the search for practical alternatives reveals a rich set of options that could address many contemporary computational demands. Classical machine learning, HPC, cloud and edge computing, natural language processing, specialized hardware, and hybrid methodologies each present their own advantages and challenges. The path ahead requires weighing these alternatives together, with enough adaptability and innovation to meet, and ideally exceed, the computational demands of an ever-evolving world.