How often do we consider the precision with which a simple thermometer or a complex measuring apparatus registers its data? Calibration serves as a pivotal process that ensures accuracy in measurement, leading to reliability in scientific endeavors and industrial applications. This article explores the multifaceted domain of measuring instrument calibration, addressing methodologies, challenges, and implications.

Measuring instruments are omnipresent in modern society, ranging from the breathalyzers used in law enforcement to the advanced spectrometers used in laboratories. But how do we ascertain their accuracy? The answer lies in calibration, a meticulous procedure that compares the measurements of an instrument with a known standard.

At its essence, calibration is the act of comparing an instrument's readings with a standard or reference of known accuracy and, where necessary, adjusting the instrument to bring the two into agreement. The comparison is most commonly made against standards traceable to national or international references. Instruments must be calibrated regularly because factors such as wear, environmental conditions, and instrumental drift degrade their accuracy over time.

The calibration process typically begins with a pre-calibration inspection. During this phase, the instrument is thoroughly examined for any visible signs of damage or wear that could compromise its functionality. This step is critical because it identifies issues that must be rectified before the actual calibration is performed.

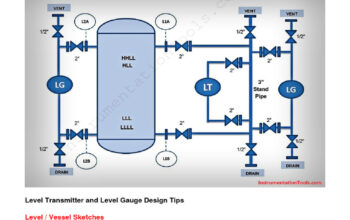

Next, the instrument undergoes a series of tests against known standards. Under controlled conditions, it measures a set of predetermined values. For instance, a pressure gauge may be tested against a deadweight tester, which generates a precisely known pressure. Discrepancies between the instrument's readings and the standard values are meticulously documented.
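The comparison step can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the `CheckPoint` type, the `as_found_errors` function, the sample values, and the tolerance of 0.5 units are all hypothetical and chosen only to show how discrepancies might be recorded.

```python
from dataclasses import dataclass


@dataclass
class CheckPoint:
    reference: float  # value applied by the standard (hypothetical units)
    reading: float    # value indicated by the instrument under test


def as_found_errors(points, tolerance):
    """Return (reference, error, within_tolerance) for each check point."""
    results = []
    for p in points:
        error = p.reading - p.reference  # positive error: instrument reads high
        results.append((p.reference, error, abs(error) <= tolerance))
    return results


# Illustrative data only; real check points come from the calibration procedure.
points = [
    CheckPoint(reference=0.0, reading=0.02),
    CheckPoint(reference=50.0, reading=50.4),
    CheckPoint(reference=100.0, reading=100.9),
]

for ref, err, ok in as_found_errors(points, tolerance=0.5):
    print(f"{ref:6.1f}  error={err:+.2f}  {'PASS' if ok else 'FAIL'}")
```

In this made-up data set the instrument reads progressively higher toward the top of its range, so the last point falls outside the assumed tolerance and the instrument would proceed to adjustment.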

The next step involves the adjustment of the instrument. If discrepancies are noted, technicians will often employ calibration tools and techniques to realign the instrument’s scale. This adjustment may be as simple as turning a calibration screw or as complex as reprogramming the internal software of digital devices. In some cases, additional components such as resistors or capacitors may be added or replaced to correct any inaccuracies. A noteworthy aspect of this phase is that some instruments allow for ‘self-calibration,’ utilizing built-in routines to automatically adjust based on feedback from standardized measurements.
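For a digital instrument, one common form of adjustment is a linear correction (a gain and an offset) derived from the recorded discrepancies. The sketch below assumes the instrument's error is approximately linear across its range, which is not true of every device; the function names `fit_linear_correction` and `apply_correction` and the sample numbers are hypothetical.

```python
def fit_linear_correction(readings, references):
    """Least-squares fit of: reference = gain * reading + offset."""
    n = len(readings)
    if n < 2 or n != len(references):
        raise ValueError("need at least two paired readings and references")
    mean_x = sum(readings) / n
    mean_y = sum(references) / n
    sxx = sum((x - mean_x) ** 2 for x in readings)
    if sxx == 0:
        raise ValueError("readings must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(readings, references))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


def apply_correction(reading, gain, offset):
    """Map a raw instrument reading onto the reference scale."""
    return gain * reading + offset


# Illustrative data: the instrument reads slightly high, more so at full scale.
raw = [0.2, 50.6, 101.0]
ref = [0.0, 50.0, 100.0]
gain, offset = fit_linear_correction(raw, ref)
corrected = [apply_correction(r, gain, offset) for r in raw]
print(gain, offset, corrected)
```

With only two check points this reduces to a classic zero-and-span adjustment; using more points lets the fit average out noise, at the cost of hiding any nonlinearity in the residuals.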

Once adjustments are made, the tests are repeated to verify that the instrument now reads within tolerance. The results are logged, and a calibration certificate is generated detailing the condition of the instrument before and after calibration. This documentation not only serves as a record for quality assurance but is also often required for regulatory compliance in industries such as pharmaceuticals and aviation.
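A calibration record of this kind can be represented as a small data structure. The sketch below is only one possible layout: the `CalibrationRecord` class, its field names, and the example values are hypothetical, and an actual certificate would follow the format required by the laboratory or regulator concerned.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class CalibrationRecord:
    instrument_id: str
    calibrated_on: str       # ISO 8601 date string
    reference_standard: str  # identifier of the standard used
    tolerance: float
    before: list             # [reference, reading] pairs before adjustment
    after: list              # [reference, reading] pairs after adjustment

    @staticmethod
    def max_error(points):
        """Largest absolute deviation of a reading from its reference."""
        return max(abs(reading - ref) for ref, reading in points)

    def summary(self):
        return {
            "instrument_id": self.instrument_id,
            "before_pass": self.max_error(self.before) <= self.tolerance,
            "after_pass": self.max_error(self.after) <= self.tolerance,
        }


# Illustrative values only.
record = CalibrationRecord(
    instrument_id="PG-0001",
    calibrated_on="2024-01-15",
    reference_standard="DWT-EXAMPLE",
    tolerance=0.5,
    before=[[0.0, 0.2], [100.0, 101.0]],
    after=[[0.0, 0.0], [100.0, 100.1]],
)
print(json.dumps({**asdict(record), **record.summary()}, indent=2))
```

Keeping the readings from before and after adjustment in the same record makes it possible to judge later whether measurements taken prior to the calibration were affected by an out-of-tolerance instrument.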

While these steps outline the basic procedure of calibration, the task is often fraught with challenges. One notable challenge is environmental variability, which can greatly influence measurement outcomes. For instance, the same sensor can give different readings as ambient temperature or pressure changes. Calibration must therefore account for such variables by conducting tests under specified, controlled environmental conditions.
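One simple way to enforce specified environmental conditions is to check them before accepting any readings. The following sketch assumes hypothetical limits for temperature and pressure; the names `LIMITS` and `out_of_limits` and the numeric ranges are illustrative, and real limits would come from the applicable calibration procedure.

```python
# Hypothetical acceptance limits; actual values depend on the procedure in use.
LIMITS = {
    "temperature_c": (21.0, 25.0),
    "pressure_kpa": (98.0, 104.0),
}


def out_of_limits(conditions, limits=LIMITS):
    """Return the names of conditions that are missing or outside their limits."""
    failures = []
    for name, (low, high) in limits.items():
        value = conditions.get(name)
        if value is None or not (low <= value <= high):
            failures.append(name)
    return failures


ambient = {"temperature_c": 27.5, "pressure_kpa": 101.3}
problems = out_of_limits(ambient)
if problems:
    print("Calibration should wait; out of limits:", ", ".join(problems))
else:
    print("Environment within limits; calibration can proceed.")
```

Treating a missing measurement as a failure is a deliberate choice here: an unrecorded condition cannot be shown to have been within limits.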

Another challenge lies with the instruments themselves, particularly older or more elaborate devices, which may be obsolete or lack available standards. As technology evolves, some measuring instruments become less compatible with existing calibration standards, necessitating the development of new methodologies or the use of specialized services that can still support these legacy systems.

Human factors also play a significant role in the calibration process. The personnel conducting calibrations must have expert knowledge and training to ensure the accuracy and integrity of their work. Lack of proper training can lead to critical errors with costly or even hazardous consequences, particularly in fields such as medicine or aerospace, where precision is non-negotiable.

A significant consideration in the calibration process is the choice of a standard. Calibration standards are usually traceable, through an unbroken chain of comparisons, to national or international references such as those maintained by the National Institute of Standards and Technology (NIST) in the United States. The International Organization for Standardization (ISO), for its part, publishes documentary standards such as ISO/IEC 17025, which sets out competence requirements for calibration laboratories. This traceability ensures that measurements can be compared across different regions and applications, contributing to a shared understanding of measurement accuracy.

The implications of effective calibration are vast. Accurate measurements impact not only research and development but also product quality and safety. For instance, in the pharmaceutical industry, drug formulations require precise measurements to ensure efficacy and safety. Any deviation stemming from improper calibration can lead to detrimental outcomes, including adverse health effects or product recalls.

Conversely, a well-calibrated system fosters confidence in the data produced, thereby enhancing productivity and reducing waste. In industrial applications, where precision machinery operates based on calibrated instruments, the entire manufacturing process hinges on the integrity of measurements. Miscalibrated instruments can lead to defects, causing financial loss and compromising safety standards.

In conclusion, the process of calibrating measuring instruments is an intricate blend of science, skill, and stringent procedural discipline. As this field continues to evolve, emphasis must be placed on both the technology and the human expertise behind calibration. A systems-based approach that embraces rigorous standards, comprehensive training, and adaptive methodologies serves not only to preserve the accuracy of our measurements but also to uphold the very foundation of empirical research and industrial operations. Ultimately, the question remains: in an age of increasing automation, how will we reconcile the complexities of calibration with the need for precision across so many applications? The answer may determine the future of measurement accuracy in every field.