Classical computing, a cornerstone of modern technology, is the framework on which the vast majority of computational processes rest. It is an intricate interplay of hardware and software that manipulates binary data through well-defined algorithms, and it has carried the digital age to its current complexity. Despite its ubiquity and fundamental nature, a detailed exploration of classical computing reveals layers of sophistication that invite an appreciation beyond mere functionality.

At the heart of classical computing lies the binary digit, or bit, the fundamental unit of information. Each bit can be in one of two states: zero or one. Sequences of bits can represent a multitude of data types, including numbers, characters, and images, and this binary foundation underlies both digital computation and digital communication. Bits are manipulated through logical operations governed by Boolean algebra, which builds every computation from a small set of basic operations such as AND, OR, and NOT.
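To make the bit-level picture concrete, here is a minimal Python sketch, assuming bits are modeled as the integers 0 and 1. It builds XOR from AND, OR, and NOT, combines these gates into a half adder and a full adder, and chains full adders into an 8-bit ripple-carry adder. The function names (`half_adder`, `full_adder`, `add`, `to_bits`) are illustrative only; real hardware implements these gates as circuits rather than function calls.

```python
def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def NOT(a: int) -> int:
    return 1 - a  # flips 0 to 1 and 1 to 0

def XOR(a: int, b: int) -> int:
    # XOR expressed using only AND, OR, and NOT
    return OR(AND(a, NOT(b)), AND(NOT(a), b))

def half_adder(a: int, b: int) -> tuple:
    """Add two bits; return (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)

def full_adder(a: int, b: int, carry_in: int) -> tuple:
    """Add two bits plus an incoming carry; return (sum_bit, carry_out)."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, OR(c1, c2)

def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, one bit at a time."""
    result, carry = 0, 0
    for i in range(width):
        a = (x >> i) & 1  # i-th bit of x
        b = (y >> i) & 1  # i-th bit of y
        s, carry = full_adder(a, b, carry)
        result |= s << i
    return result  # a carry out of the top bit is discarded (overflow)

def to_bits(n: int, width: int = 8) -> str:
    """Render n as a zero-padded binary string of the given width."""
    return format(n, f"0{width}b")

print(to_bits(ord("A")))  # 01000001 (the character 'A' has code 65)
print(add(5, 3))          # 8
print(add(200, 100))      # 44, because 300 does not fit in 8 bits
```

The last line shows fixed-width overflow: only eight result bits are kept, so 300 wraps around to 300 - 256 = 44.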

The architecture of classical computers can be divided into several pivotal components: the central processing unit (CPU), memory, storage, and input/output systems. The CPU, often regarded as the brain of the computer, executes program instructions by performing calculations and managing the flow of data within the system. Its performance is further improved by cache memory, a small, fast storage area between the CPU and main memory that keeps recently or frequently used data close at hand, reducing the time the CPU spends waiting for slower memory.
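The benefit of a cache can be illustrated with a small simulation. This is a simplified sketch, assuming a fully associative cache with least-recently-used (LRU) eviction and a Python list standing in for main memory. Real CPU caches are hardware, are usually set-associative, and operate on multi-byte cache lines, so the hypothetical `LRUCache` class below is a model of the idea rather than a description of any particular processor.

```python
from collections import OrderedDict

class LRUCache:
    """Toy fully associative cache with least-recently-used eviction."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> cached value, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, address: int, memory: list) -> int:
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)  # mark as most recently used
            return self.lines[address]
        self.misses += 1
        value = memory[address]  # slow path: fetch from main memory
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry
        return value

memory = list(range(100))
cache = LRUCache(capacity=4)
# A loop that repeatedly touches the same four addresses (temporal locality).
for _ in range(10):
    for addr in (0, 1, 2, 3):
        cache.read(addr, memory)
print(cache.hits, cache.misses)  # 36 4
```

After four initial misses, every access hits. If the loop instead cycled through five addresses, this capacity-four LRU cache would evict each address just before it was needed again and miss on every access, which is why access patterns matter so much for cache performance.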

Memory plays a critical role in classical computers because it temporarily holds the data and instructions that a running task needs. Random Access Memory (RAM) serves this purpose, allowing fast read and write access to any location. However, RAM is volatile: its contents are lost when the system powers down. In contrast, non-volatile storage devices, such as hard disk drives and solid-state drives, retain information without power and serve as long-term storage for data and applications.

Software, in conjunction with hardware, constitutes the operational backbone of classical computing. Operating systems, such as Windows, macOS, or Linux, manage hardware resources and provide a user interface. Applications, ranging from word processors to complex data analysis programs, rely on both the operating system and the hardware to carry out the tasks users request. This close interdependence of hardware and software is what makes effective computation possible.

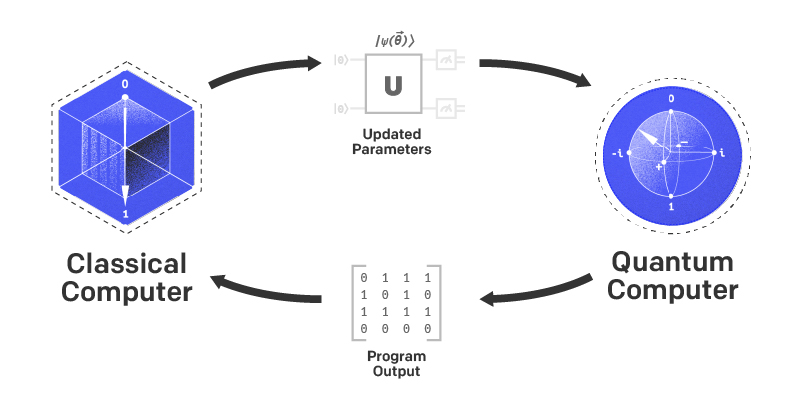

Most classical computers follow the von Neumann architecture, in which the CPU, memory, and input/output devices are interconnected and program instructions are stored in the same memory as data. The CPU repeatedly fetches an instruction from memory, decodes it, and executes it, producing a structured, sequential flow of operations. This sequential processing has inherent limitations, however, especially for large-scale problems that require many simultaneous calculations, and these limitations motivate the exploration of alternative computational paradigms.
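The stored-program idea and the fetch-decode-execute cycle can be sketched with a toy machine. This is a minimal illustration, assuming a hypothetical accumulator machine with four made-up opcodes (`LOAD`, `ADD`, `STORE`, `HALT`). Instructions and data sit side by side in one Python list that stands in for memory, and the loop handles exactly one instruction per step.

```python
def run(memory: list) -> list:
    """Run a program on a hypothetical accumulator machine.

    Instructions are (opcode, address) tuples and data cells are ints,
    all stored in the same memory list.
    """
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator register
    while True:
        opcode, addr = memory[pc]  # fetch the instruction at pc
        pc += 1                    # advance to the next instruction
        if opcode == "LOAD":       # decode and execute
            acc = memory[addr]
        elif opcode == "ADD":
            acc += memory[addr]
        elif opcode == "STORE":
            memory[addr] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")

program = [
    ("LOAD", 4),   # 0: acc = memory[4]
    ("ADD", 5),    # 1: acc = acc + memory[5]
    ("STORE", 6),  # 2: memory[6] = acc
    ("HALT", 0),   # 3: stop
    20,            # 4: data
    22,            # 5: data
    0,             # 6: the result is written here
]
print(run(program)[6])  # 42
```

Every instruction fetch and every data access goes through the single `memory` list one step at a time, which mirrors the sequential character of the von Neumann model.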

Despite their remarkable capabilities, classical computers face fundamental limits on how efficiently certain problems can be solved. Computer scientists classify problems by how the running time of the best available algorithms grows with the size of the input. Problems in the class P can be solved in polynomial time, while problems in NP (nondeterministic polynomial time) are those whose proposed solutions can be verified in polynomial time. Whether every problem in NP can also be solved in polynomial time is the open “P vs NP” question, one of the fundamental problems of computer science. For many important problems in NP, in particular the NP-complete ones, no polynomial-time algorithm is known, and the best known algorithms take exponential time in the worst case.
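The gap between finding and checking a solution can be seen with subset sum, a well-known NP-complete problem: given a list of integers and a target, is there a subset that adds up to the target? The sketch below, with illustrative function names, contrasts a brute-force search that may examine all 2**n subsets with a verifier that checks a proposed answer in time proportional to its length. Brute force is chosen here only for clarity; cleverer algorithms exist, but none is known to run in polynomial time in the size of the input on every instance.

```python
from itertools import combinations

def solve_brute_force(numbers, target):
    """Search every subset (up to 2**n of them) for one summing to target."""
    n = len(numbers)
    for size in range(n + 1):
        for indices in combinations(range(n), size):
            if sum(numbers[i] for i in indices) == target:
                return indices  # a certificate: indices of the chosen numbers
    return None  # no subset works

def verify(numbers, target, certificate):
    """Check a proposed certificate in time roughly linear in its length."""
    if certificate is None:
        return False
    if len(set(certificate)) != len(certificate):  # each index used at most once
        return False
    if not all(0 <= i < len(numbers) for i in certificate):
        return False
    return sum(numbers[i] for i in certificate) == target

nums = [3, 34, 4, 12, 5, 2]
cert = solve_brute_force(nums, 9)
print(cert, verify(nums, 9, cert))  # (2, 4) True, since 4 + 5 == 9
```

Adding one more number to the list can double the number of subsets the search may have to try, while the verifier's work grows only with the length of the certificate.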

Throughout its history, computing has been driven by the quest to increase speed and efficiency and to solve ever more complex problems. Technological advances, such as the transition from vacuum tubes to transistors and subsequently to integrated circuits, have increased processing power exponentially. This evolution has also enabled the miniaturization of components, vividly illustrated by the transition from large mainframe systems to pocket-sized smartphones, making computing power accessible to the masses.

The fascination with classical computing, however, extends beyond mere performance enhancements or convenience. It encapsulates a profound engagement with the abstract principles of logic and mathematics that underpin its operation. The interplay of hardware and software fosters an environment where creativity and analytical skills merge, inviting individuals not only to consume technology but also to innovate within its expansive framework.

Moreover, fields built on classical computing, such as artificial intelligence and machine learning, raise philosophical questions that fuel ongoing debates about the nature of consciousness, decision-making, and ethics in the digital realm. As classical computing continues to evolve, its influence on domains such as medicine, finance, and education underscores the pivotal role it plays in shaping contemporary society.

In conclusion, classical computing has grown far beyond its origins in binary logic and algorithmic processes. It is a dynamic and multifaceted paradigm that continues to evolve, driven by humanity's insatiable curiosity. Engaging deeply with the intricacies of classical computing not only fosters a genuine appreciation for its capabilities but also compels us to consider the broader ramifications of our increasingly digital existence. As we move further into the information age, the exploration of classical computing remains a crucial endeavor, urging us to ponder its future trajectory and its ethical implications for society at large.