In the age of transformative technological advancement, the emergence of quantum computing has spurred fervent debates about its conceptual status as a computer, particularly in relation to classical digital computers. Delving into this issue requires a nuanced understanding of both quantum mechanics and digital computation, as well as an appreciation for the implications of this revolutionary technology. The question, “Is a quantum computer still a digital computer?” invites inquiry into the very definitions and foundational principles that govern computing.

First, let us define what constitutes a traditional digital computer. Classical digital computers operate based on binary logic; they process information in discrete units known as bits, which can exist in one of two states: 0 or 1. This binary system underpins nearly all existing computational frameworks, allowing for operations such as addition, subtraction, and more complex algorithms through the manipulation of these bits. Consequently, digital computers are designed to perform tasks that adhere to deterministic logic, navigating through data following strict, algorithmic paths.
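
To make the idea of arithmetic built from bit manipulation concrete, here is a minimal sketch of binary addition using only the logical operations XOR, AND, and OR. The function names (`full_adder`, `add_bits`, `to_bits`, `from_bits`) are illustrative choices for this example, not part of any standard library.

```python
def full_adder(a, b, carry_in):
    """Add three bits and return (sum_bit, carry_out)."""
    sum_bit = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return sum_bit, carry_out


def add_bits(x_bits, y_bits):
    """Add two equal-length bit lists, least significant bit first."""
    result, carry = [], 0
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry)
        result.append(s)
    result.append(carry)  # the final carry becomes the top bit
    return result


def to_bits(n, width):
    """Convert a non-negative integer to a little-endian bit list."""
    return [(n >> i) & 1 for i in range(width)]


def from_bits(bits):
    """Convert a little-endian bit list back to an integer."""
    return sum(bit << i for i, bit in enumerate(bits))


total = from_bits(add_bits(to_bits(5, 4), to_bits(6, 4)))  # 5 + 6 = 11
```

Every step here is deterministic: the same input bits always produce the same output bits, which is the property the paragraph above attributes to classical digital logic.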

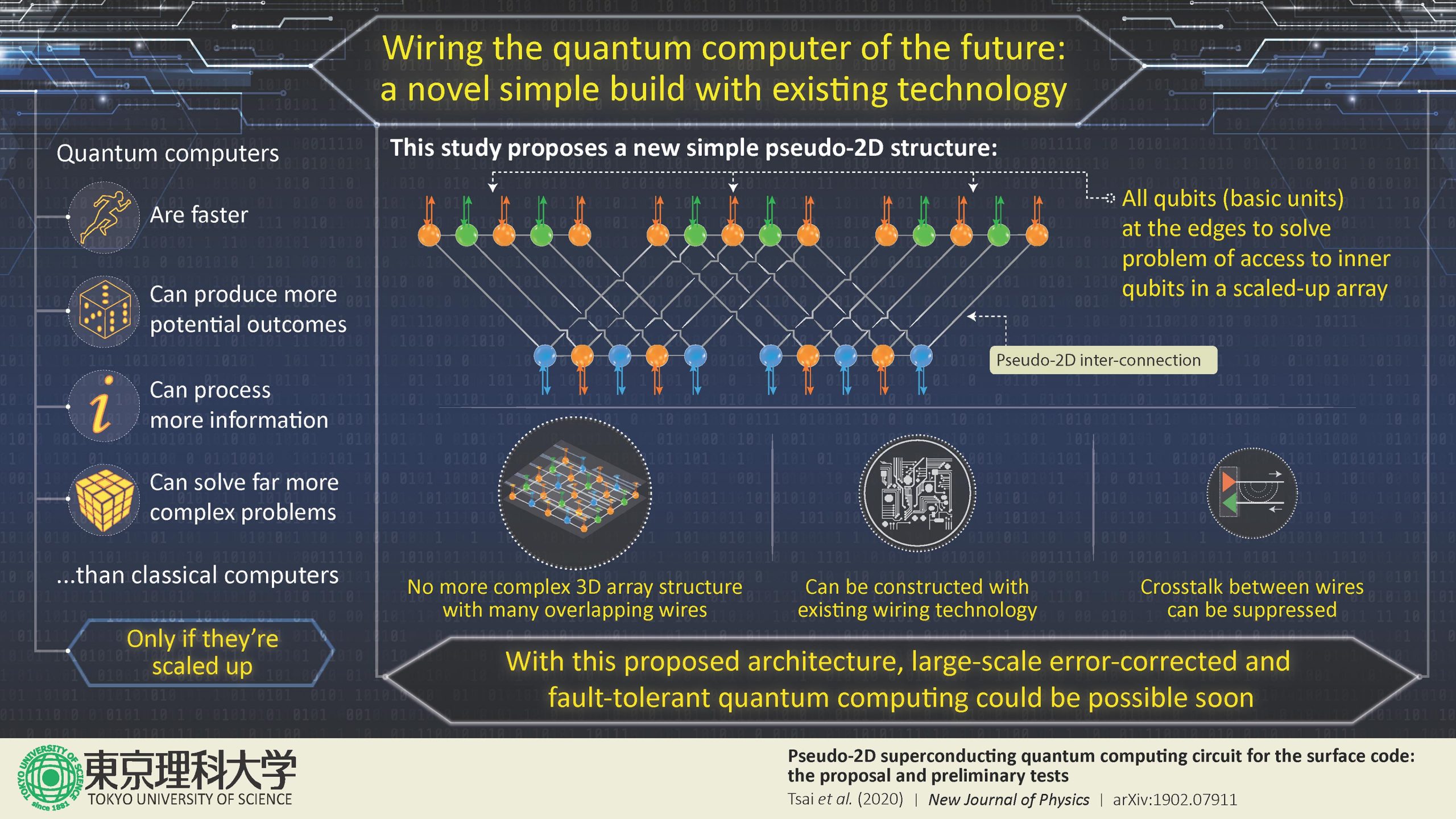

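Conversely, quantum computers harness the principles of quantum mechanics, which govern the behavior of matter and energy at atomic and subatomic scales. At the core of quantum computing are qubits, the quantum analogues of classical bits. Unlike a bit, which is either 0 or 1, a qubit can exist in a superposition of both states, described by a pair of complex amplitudes; measuring it still yields a single 0 or 1, with probabilities set by those amplitudes. A register of n qubits is described by 2^n amplitudes, and quantum algorithms arrange for these amplitudes to interfere so that correct answers become likely when the register is measured. This is not the same as running every possibility in parallel and reading out all the results, but for specific problems it yields large, in some cases exponential, speedups over the best known classical methods.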
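
Superposition can be illustrated with a small classical simulation of a single qubit. The sketch below assumes the usual textbook representation, in which a qubit is a pair of complex amplitudes and the Hadamard gate turns the state 0 into an equal superposition. The helper names (`hadamard`, `probabilities`, `measure`) are made up for this example, and a real quantum computer does not work by storing amplitudes this way.

```python
import math
import random


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp0, amp1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Return the probabilities of measuring 0 and 1."""
    return tuple(abs(amp) ** 2 for amp in state)


def measure(state, rng=random):
    """Simulate one measurement; the result is always a plain bit."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


zero = (1 + 0j, 0 + 0j)   # the definite state 0
plus = hadamard(zero)     # equal superposition of 0 and 1
back = hadamard(plus)     # amplitudes interfere and return to state 0
```

Measuring `plus` gives 0 or 1 with roughly equal probability, yet applying the same gate a second time returns the qubit to a definite 0. That cancellation of amplitudes is interference, and a fair coin flip has no equivalent of it.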

To better appreciate this distinction, consider quantum entanglement, another fundamental aspect of quantum theory. When qubits become entangled, the state of one cannot be described independently of the others, and their measurement outcomes are correlated regardless of the physical distance separating them. These non-local correlations cannot be used to send information faster than light, but they have no counterpart in simple binary logic and serve as a key resource in quantum algorithms, challenging traditional notions of information processing.
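
As a rough illustration, the sketch below samples measurements from a two-qubit Bell state, the standard textbook example of entanglement. It is a classical simulation of the measurement statistics only: the names `BELL_STATE` and `sample` are hypothetical, and the perfect agreement it shows could also be produced by a shared classical coin. What makes entanglement genuinely non-classical appears only when the qubits are measured in several different bases, which this sketch does not attempt.

```python
import math
import random

# Amplitudes for the basis states 00, 01, 10, 11, in that order.
BELL_STATE = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def sample(state, rng):
    """Measure both qubits once and return the outcome as (q0, q1)."""
    weights = [abs(amp) ** 2 for amp in state]
    index = rng.choices(range(4), weights=weights)[0]
    return (index >> 1) & 1, index & 1


rng = random.Random(0)  # fixed seed so the run is repeatable
outcomes = [sample(BELL_STATE, rng) for _ in range(1000)]

# Each qubit on its own looks random, but the two always agree.
always_agree = all(q0 == q1 for q0, q1 in outcomes)
```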

The convergence of these two paradigms raises a pivotal question: Can quantum computers indeed be classified as digital computers? The answer hinges on how we perceive “digital.” If we define digital computation strictly through binary representation and deterministic logic, then the inherent characteristics of quantum computers challenge this categorization. Their reliance on superposition and entanglement implies a fundamentally different architecture of information processing.

Yet the crux of the matter lies not merely in these distinctions but in the potential for quantum computing to alter the very fabric of computation. Progress in classical digital computers has long tracked Moore’s Law, the empirical observation that the number of transistors on a microchip doubles approximately every two years, a trend that is slowing as transistors approach physical limits. Quantum computing does not continue that trend; it offers a different model of computation whose advantage on certain problems does not depend on transistor density. Some calculations that would take traditional computers millennia could, in theory, be completed in a practical amount of time on a sufficiently large, error-corrected quantum machine.

Consider the realm of cryptography. Quantum computers promise the ability to break widely used public-key encryption schemes such as RSA through Shor’s algorithm, which factors large integers in polynomial time, whereas the best known classical algorithms require super-polynomial time. Because the security of these schemes rests on the difficulty of factoring, or of the related discrete logarithm problem, a large fault-tolerant quantum computer would threaten current data security protocols. This disruptive potential epitomizes a shift in the computational landscape, with profound implications for privacy, security, and data integrity.
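
Shor’s algorithm works by reducing factoring to finding the order of a number modulo N, and only that order-finding step needs a quantum computer. The sketch below shows the classical reduction on a toy example, with the order found by brute force. That brute-force loop is a stand-in assumed for illustration, and it is precisely the part that becomes infeasible for large N on classical hardware. The function names are hypothetical.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute-force stand-in for the quantum order-finding step.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_base(a, n):
    """Try to split n using base a, where 1 < a < n.

    Returns a pair of factors, or None if this base does not work.
    """
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared   # the base already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None                  # odd order: try another base
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                  # trivial square root: try another base
    p = gcd(half - 1, n)
    return p, n // p


print(factor_from_base(7, 15))  # prints (3, 5)
```

Here 7 has order 4 modulo 15, so 7² mod 15 = 4, and gcd(4 − 1, 15) = 3 reveals a factor. Some bases fail, for example 14 with N = 15, in which case the procedure is simply retried with a different base.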

While quantum computers exhibit superior capabilities for specific applications, they currently coexist with classical computers in a spectrum of computational power. The reality is that present-day quantum computers are in the nascent stages of development, often supplemented by classical systems to harness their unique benefits effectively. Hybrid models are emerging, where quantum processes are integrated with traditional computing techniques, forging a symbiotic relationship between the two paradigms.

Moreover, the realm of applications is not confined to cryptography. Quantum computing holds promise in fields such as materials science, drug discovery, and optimization, domains where quantum algorithms may be able to model complex systems that traditional computers struggle to analyze. The implications extend far beyond mere computational power; they offer new ways of understanding and modeling intricate phenomena in nature.

Nevertheless, the transition to quantum computing is rife with challenges. Issues related to qubit coherence, error rates, and scalability must be addressed before large-scale quantum computation becomes feasible. Rigorous research is underway to develop error-correcting codes and fault-tolerant architectures that could mitigate these obstacles, paving the way for practical implementations of quantum technology.
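
The basic intuition behind error correction can be sketched with a classical three-copy repetition code and majority voting. This is a deliberate simplification and an assumption of this example: real quantum codes cannot copy an unknown qubit state and must also correct phase errors, so the sketch illustrates the redundancy principle only, not an actual quantum code. The function names are hypothetical.

```python
from itertools import product


def majority(bits):
    """Return the value held by at least two of three bits."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(p):
    """Exact probability that majority voting decodes wrongly.

    Each of the three copies is flipped independently with probability p.
    """
    total = 0.0
    for flips in product([0, 1], repeat=3):
        prob = 1.0
        for flipped in flips:
            prob *= p if flipped else (1 - p)
        if majority(flips) == 1:   # two or more flips defeat the vote
            total += prob
    return total


rate = logical_error_rate(0.1)  # 3 * 0.1**2 * 0.9 + 0.1**3 = 0.028
```

With a 10% chance of error per copy, the encoded bit fails only about 2.8% of the time. The benefit holds only while the physical error rate is low enough, which is one reason reducing qubit error rates matters so much for scalability.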

In conclusion, while one might assert that quantum computers remain within the wider spectrum of computation, categorizing them as digital computers requires a reevaluation of the foundational principles of computing itself. Quantum computing promises to not only enhance computational capacities but also redefine our understanding of information processing, compelling us to confront the limitations of classical paradigms. As we stand at the precipice of this quantum revolution, the dialogue surrounding the classification of quantum computers elucidates the broader implications of technological progress and the continual evolution of the computing landscape. The inquiry into whether quantum computers are indeed digital invites us to rethink not just what a computer is but also what it might yet become.