In the emerging field of quantum computing, boson sampling stands as a beacon of potential, illuminating pathways that appear inaccessible to classical computing. Drawing an analogy, one might envision boson sampling as a river winding through a dense forest: a route fraught with complexity, yet uniquely suited to challenges that would stump a conventional vessel. Proposed by Scott Aaronson and Alex Arkhipov in 2011 as a restricted, non-universal model of quantum computation, boson sampling rests on the quantum interference of single photons, with profound implications for computational complexity theory and for experimental demonstrations of quantum advantage.

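At its core, boson sampling exploits the indistinguishability of identical bosons, such as photons. In quantum mechanics, bosons follow Bose-Einstein statistics, which allow multiple particles to occupy the same quantum state. This stands in striking contrast to fermions, such as electrons, which obey the Pauli exclusion principle. The difference has a sharp mathematical signature: when n identical particles scatter through a network, the transition amplitudes for fermions are given by matrix determinants, which are efficiently computable, whereas those for bosons are given by matrix permanents, which are not believed to be. Boson sampling harnesses this bosonic behavior by sending indistinguishable photons through an optical network and sampling from the resulting output distribution, a task believed to require classical resources that grow exponentially with the number of photons.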
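To make the permanent versus determinant contrast concrete, the sketch below takes the smallest possible case: two identical particles entering the two input ports of a 50:50 beam splitter. As an assumption of this example, the beam splitter is modeled by the real unitary U = (1/√2)[[1, 1], [1, −1]], one common convention; the helper names `perm2` and `det2` are illustrative. The amplitude for the two particles to leave through different output ports is the permanent of U for bosons and the determinant of U for fermions.

```python
import math

# 50:50 beam splitter as a 2x2 unitary (one common convention; an assumption here).
s = 1 / math.sqrt(2)
U = [[s, s],
     [s, -s]]

def perm2(m):
    """Permanent of a 2x2 matrix: like the determinant, but every term has a + sign."""
    return m[0][0] * m[1][1] + m[0][1] * m[1][0]

def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

# One particle enters each input port; we ask for one particle in each output port.
p_boson_coincidence = abs(perm2(U)) ** 2    # bosons: |Perm(U)|^2
p_fermion_coincidence = abs(det2(U)) ** 2   # fermions: |Det(U)|^2

print(f"bosons exit separately with probability   {p_boson_coincidence:.3f}")
print(f"fermions exit separately with probability {p_fermion_coincidence:.3f}")
```

The bosons never leave through separate ports (they bunch, the Hong-Ou-Mandel effect), while the fermions always do: the same matrix fed to two different functions yields opposite physics.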

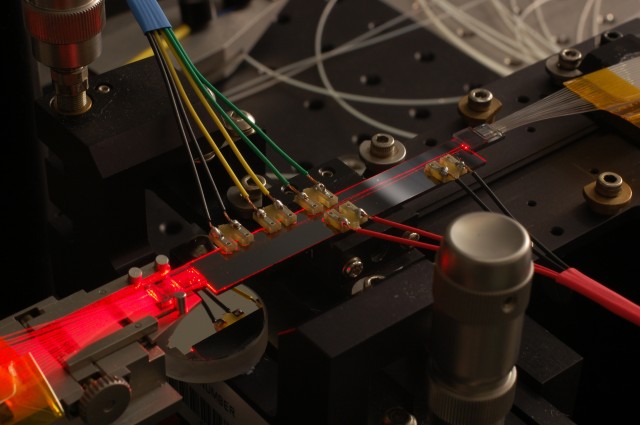

The computation begins by preparing n indistinguishable single photons and injecting them simultaneously into n of the m input modes of a linear optical network, an intricate array of beam splitters and phase shifters that together implement an m × m unitary matrix U. As the photons traverse the network, their possible routes intertwine and interfere like partners in a ballet, producing a rich superposition of multiphoton states. At the output, detectors record how many photons emerge in each mode; each run of the experiment yields one such detection pattern, drawn from a probability distribution fixed by U and the input configuration. Under widely believed complexity-theoretic conjectures, sampling from this distribution is intractable for classical computers as n grows, which is what is known as the “hardness of the sampling problem.”
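For reference, the standard result for an ideal, lossless network is that an input pattern $T = (t_1, \dots, t_m)$ produces the output pattern $S = (s_1, \dots, s_m)$ with probability

$$P(S \mid T) = \frac{\left|\operatorname{Perm}(U_{S,T})\right|^2}{\prod_i s_i!\,\prod_j t_j!},$$

where $U_{S,T}$ is the n × n submatrix of U built by repeating row i of U $s_i$ times and column j $t_j$ times. The Python sketch below enumerates this distribution by brute force for a tiny case. The choice of interferometer (a 3-mode discrete Fourier transform) and the helper names are illustrative assumptions, and the naive permanent is only practical for a handful of photons.

```python
import cmath
import itertools
import math

def permanent(a):
    """Naive permanent: sum over all n! permutations (only for tiny matrices)."""
    n = len(a)
    total = 0
    for sigma in itertools.permutations(range(n)):
        term = 1
        for i in range(n):
            term *= a[i][sigma[i]]
        total += term
    return total

def dft_unitary(m):
    """m-mode discrete Fourier transform, a balanced interferometer (illustrative choice)."""
    w = cmath.exp(2j * cmath.pi / m)
    return [[w ** (j * k) / math.sqrt(m) for k in range(m)] for j in range(m)]

def mode_list(pattern):
    """Expand an occupation pattern such as (2, 0, 1) into mode indices [0, 0, 2]."""
    return [mode for mode, count in enumerate(pattern) for _ in range(count)]

def output_probability(u, inp, out):
    """P(out | inp) = |Perm(U_{S,T})|^2 / (prod s_i! * prod t_j!)."""
    rows, cols = mode_list(out), mode_list(inp)
    sub = [[u[r][c] for c in cols] for r in rows]
    norm = math.prod(math.factorial(k) for k in inp) * math.prod(math.factorial(k) for k in out)
    return abs(permanent(sub)) ** 2 / norm

def output_distribution(u, inp):
    """Probabilities of every way to place sum(inp) photons in len(u) output modes."""
    m, n = len(u), sum(inp)
    return {out: output_probability(u, inp, out)
            for out in itertools.product(range(n + 1), repeat=m)
            if sum(out) == n}

U = dft_unitary(3)
dist = output_distribution(U, (1, 1, 1))  # one photon in each input mode
for pattern, p in sorted(dist.items()):
    print(pattern, round(p, 4))
print("total probability:", round(sum(dist.values()), 10))
```

With one photon per input mode, some patterns, such as (2, 1, 0), occur with probability zero because the terms of the permanent cancel exactly, while the all-separate pattern (1, 1, 1) occurs with probability 1/3. A real experiment never sees this table; it only draws samples from it.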

One of the most striking features of boson sampling is that it is believed to perform a specific, narrowly defined task faster than any classical computer. The best known exact classical samplers for ideal boson sampling need on the order of $n \cdot 2^{n}$ operations per sample for n photons, so the cost of classical simulation grows exponentially and rapidly becomes prohibitive as experiments scale up. This gap is pivotal: it embodies the essence of quantum advantage, the demonstration that a quantum device can complete a well-defined computational task that is infeasible for even the most powerful classical supercomputers.
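A related, simpler quantity is the number of distinct detection patterns: n photons spread over m modes can produce $\binom{m+n-1}{n}$ patterns. Its growth does not by itself prove hardness (distinguishable photons have the same outcome space yet are easy to sample classically), but it shows why enumerating the full distribution, as a brute-force simulator would, quickly becomes hopeless. The sketch assumes m = n² modes, in line with the "many more modes than photons" regime usually assumed in hardness arguments; the choice is illustrative and the function name is hypothetical.

```python
import math

def num_patterns(n_photons, m_modes):
    """Number of ways to distribute n indistinguishable photons over m modes."""
    return math.comb(m_modes + n_photons - 1, n_photons)

for n in (2, 5, 10, 20, 30):
    m = n * n  # illustrative assumption: modes grow quadratically with photons
    print(f"n = {n:2d}, m = {m:3d}: {num_patterns(n, m):.3e} possible outcomes")
```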

A classical comparison shows why boson sampling may offer a shortcut through the computational forest. Consider the classic problem of evaluating the permanent of a matrix, which resembles the determinant except that every term carries a plus sign. For an n × n matrix, expanding the permanent term by term requires $O(n \cdot n!)$ operations, and even the best known exact algorithms, such as Ryser's formula, still need $O(2^{n} n)$ operations; Valiant showed in 1979 that computing the permanent exactly is #P-hard. Boson sampling does not compute permanents. Instead, the photons generate samples from a distribution whose probabilities are squared magnitudes of permanents of submatrices of U, a task for which linear optics appears to outperform any known classical method.
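The gap between factorial and exponential cost can be seen by placing a direct expansion next to Ryser's inclusion-exclusion formula, $\operatorname{Perm}(A) = (-1)^n \sum_{S \subseteq \{1,\dots,n\}} (-1)^{|S|} \prod_{i=1}^{n} \sum_{j \in S} a_{ij}$. The Python sketch below is a straightforward, unoptimized rendering of both; the function names are illustrative.

```python
import itertools
import math

def permanent_naive(a):
    """Direct expansion over all n! permutations: O(n * n!) operations."""
    n = len(a)
    return sum(math.prod(a[i][sigma[i]] for i in range(n))
               for sigma in itertools.permutations(range(n)))

def permanent_ryser(a):
    """Ryser's inclusion-exclusion formula: O(2^n * n^2) operations as written.

    perm(A) = (-1)^n * sum over column subsets S of (-1)^|S| * prod_i sum_{j in S} a[i][j]
    """
    n = len(a)
    total = 0
    for mask in range(1, 1 << n):  # the empty subset contributes 0
        cols = [j for j in range(n) if mask >> j & 1]
        row_sums_product = math.prod(sum(a[i][j] for j in cols) for i in range(n))
        total += (-1) ** len(cols) * row_sums_product
    return (-1) ** n * total

A = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
print(permanent_naive(A), permanent_ryser(A))  # both print 450
```

Visiting the column subsets in Gray-code order, so that each step adds or removes a single column and the row sums can be updated incrementally, brings Ryser's cost down to $O(2^{n} n)$. Either way, each added row roughly doubles the work: far better than n!, but still exponential.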

To lay bare the operational mechanics of boson sampling, one must appreciate the interplay between multiphoton quantum interference and photon counting at the detectors. As photons traverse the optical components, the probability amplitudes for all the different ways they can reach a given output pattern add together and interfere; no description in which each photon independently follows a classical path reproduces the resulting statistics. The output is therefore a superposition of many detection patterns, typically entangled across the output modes. A measurement with photon-counting detectors collapses this superposition into one definite pattern, a single sample; repeating the experiment many times builds up a distribution that classical computations would need excessive time and resources to reproduce.
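One way to see that this interference has no classical counterpart is to compare indistinguishable photons with distinguishable ones, which behave like classical particles taking random routes. For a collision-free output pattern (at most one photon per mode), the distinguishable-particle probability is the permanent of the matrix of squared magnitudes $|U_{ij}|^2$, rather than the squared magnitude of the permanent of U. The sketch below contrasts the two rules on a 50:50 beam splitter, assuming the convention U = (1/√2)[[1, 1], [1, −1]]; the helper name is illustrative.

```python
import math

s = 1 / math.sqrt(2)
U = [[s, s],
     [s, -s]]  # 50:50 beam splitter (one common convention; an assumption here)

def perm2(m):
    """Permanent of a 2x2 matrix."""
    return m[0][0] * m[1][1] + m[0][1] * m[1][0]

# Indistinguishable photons: amplitudes add first, then the sum is squared.
p_quantum = abs(perm2(U)) ** 2

# Distinguishable photons: probabilities add directly, so nothing cancels.
U_abs2 = [[abs(x) ** 2 for x in row] for row in U]
p_classical = perm2(U_abs2)

print(f"indistinguishable: P(one photon per output) = {p_quantum:.3f}")
print(f"distinguishable:   P(one photon per output) = {p_classical:.3f}")
```

The drop from 1/2 to 0 in the coincidence probability is the Hong-Ou-Mandel dip; in larger networks the same amplitude-level cancellations shape the whole output distribution.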

Yet the allure of boson sampling lies not merely in speed. Researchers have proposed applications in fields ranging from cryptography to the modeling of complex systems; in quantum chemistry, for instance, variants of boson sampling have been proposed for estimating the vibronic spectra of molecules. Whether such proposals deliver a practical advantage over the best classical methods remains an open research question, but the prospect of simulating quantum systems or extracting statistical properties more efficiently than classical computers could meaningfully alter the landscape of scientific inquiry.

One must also address the challenges that accompany the implementation of boson sampling. Despite its theoretical advantages, practical execution faces numerous hurdles. Photons can be lost in the sources, the optical network, and the detectors; sources may emit photons that are only partially indistinguishable; and noise can obscure the experimental results. These imperfections matter beyond data quality, because sufficiently lossy or noisy boson sampling can become efficiently simulable on a classical computer. Scalability poses a further challenge: current implementations have demonstrated boson sampling with a limited number of photons, and expanding these systems to harness greater computational power remains difficult, akin to scaling a mountain amid unpredictable weather.
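A back-of-the-envelope calculation shows why loss is so damaging at scale. Assuming, as a simplification, that every photon independently survives the whole apparatus with the same transmission probability η, all n photons arrive with probability $\eta^n$, which decays exponentially with n. The sketch below tabulates this for a few illustrative values of η; the numbers are not taken from any particular experiment, and the function name is hypothetical.

```python
def all_photons_survive(eta, n):
    """Probability that all n photons survive, assuming independent, identical transmission eta."""
    return eta ** n

for eta in (0.9, 0.7, 0.5):  # illustrative per-photon transmissions, not measured values
    row = ", ".join(f"n={n}: {all_photons_survive(eta, n):.2e}" for n in (5, 10, 20, 50))
    print(f"eta = {eta}: {row}")
```

Even at η = 0.9, a 50-photon event arrives intact only about 0.5% of the time, so the rate of useful samples collapses as experiments grow.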

Research is ongoing, exploring various avenues of improvement, from brighter sources of highly indistinguishable photons and lower-loss linear optical networks to more efficient detectors. Variants of the original protocol, such as scattershot and Gaussian boson sampling, relax some of these experimental demands. Furthermore, hybrid approaches that combine classical and quantum methods may yield practical frameworks that leverage the strengths of both, broadening the range of problems to which boson sampling can be applied.

In conclusion, universal quantum computation may still lie on the horizon, and boson sampling is not itself a universal model, yet it stands as a testament to the potential of quantum mechanics to forge pathways through computationally intricate territory. Rooted in the intriguing nature of indistinguishable particles, boson sampling serves not only as a tool but also as a reflection of fundamental physical principles and their capacity to reshape traditional computation. As researchers continue to navigate the quantum landscape, the promise of boson sampling instills optimism: it is an emblem of potential that challenges our assumptions about the limits of classical computation and opens the door to a captivating new age of computation.