In the realm of scientific inquiry and experimental design, the precision of measurements plays a pivotal role in drawing meaningful conclusions. Often overlooked, yet influential, is reading error. How does the simple act of reading a scale or dial translate into significant consequences for results? The question challenges our perception of accuracy and highlights the subtleties of how people interact with measuring tools. This discussion examines the dimensions of reading error, its implications, and the methods available to mitigate it.

At its core, a reading error is the discrepancy between the true value of a quantity and the value an observer records from a measuring instrument. This error can take various forms and depends on several factors: the limitations inherent in the measuring device, the environmental conditions, and the way the observer interprets the indication. The pursuit of accuracy requires practitioners in every discipline to identify and control these sources of inaccuracy.
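Before any correction can be made, the size of the reading error has to be estimated. One common convention in teaching laboratories, assumed here rather than taken from this article, quotes the reading uncertainty of an analog scale as half its smallest division and that of a digital display as one unit in its last displayed digit. The sketch below encodes that convention; the function name `reading_uncertainty` is hypothetical, and other conventions (for example, half a digit for digital displays) are also in use.

```python
def reading_uncertainty(resolution: float, analog: bool) -> float:
    """Estimate the reading uncertainty of an instrument.

    Assumed convention (one of several in use):
      - analog scale: half the smallest scale division
      - digital display: one unit of the last displayed digit
    """
    if resolution <= 0:
        raise ValueError("resolution must be positive")
    return resolution / 2 if analog else resolution


# Hypothetical instruments: a ruler marked in millimetres
# and a balance that displays to 0.01 g.
print(reading_uncertainty(1.0, analog=True))    # uncertainty in mm
print(reading_uncertainty(0.01, analog=False))  # uncertainty in g
```

The rule is deliberately simple; an observer who can reliably interpolate finer than half a division may justify a smaller value, while a blurred or vibrating pointer may call for a larger one.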

Reading errors can be broadly categorized into systematic and random errors, and both merit examination. Systematic errors are consistent, reproducible inaccuracies usually attributable to a flaw in the measurement system, such as a miscalibrated instrument. For instance, if a scale consistently reads two grams heavier than the actual mass, every measurement taken with it carries this bias, leading researchers astray. Random errors, in contrast, arise from unpredictable fluctuations, which may stem from environmental variations such as changes in temperature or humidity. Such errors reflect the inherent variability of many experimental contexts.
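The different behaviour of the two error types shows up clearly in a small simulation. The sketch below assumes, purely for illustration, a true mass of 100 g, the 2 g bias from the scale example, and Gaussian random noise with a standard deviation of 0.5 g; the names `simulated_reading` and `mean_error` are hypothetical. Averaging more readings shrinks the random part, but the mean error settles near +2 g rather than zero.

```python
import random
import statistics

TRUE_MASS_G = 100.0  # assumed true mass of the sample
BIAS_G = 2.0         # systematic error: the scale reads 2 g heavy
NOISE_SD_G = 0.5     # random error: assumed standard deviation of fluctuations


def simulated_reading(rng: random.Random) -> float:
    """One reading = true value + fixed bias + a fresh random fluctuation."""
    return TRUE_MASS_G + BIAS_G + rng.gauss(0.0, NOISE_SD_G)


def mean_error(n: int, seed: int = 0) -> float:
    """Average n simulated readings and return the mean's offset from the true mass."""
    rng = random.Random(seed)
    readings = [simulated_reading(rng) for _ in range(n)]
    return statistics.fmean(readings) - TRUE_MASS_G


for n in (1, 10, 1000):
    print(f"n={n:5d}  mean error = {mean_error(n):+.3f} g")
```

Repetition reduces random error but leaves systematic error untouched, which is why calibration and repeated measurement address different problems.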

Moreover, one must consider the human factor: the observer's ability to read measurements accurately. This adds a layer of complexity, encompassing both the limits of visual perception and the observer's skill in interpreting instrument readings. For example, on a thermometer with a non-linear scale, a novice might misjudge values that fall between gradations, leading to erroneous conclusions. The critical question, then, is how to mitigate visual reading errors when working with complex instruments.

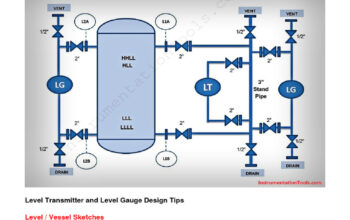

Several strategies can reduce reading errors. The first is proper instrument calibration: a well-calibrated instrument has its known offset and gain errors corrected, so its readings stay as close to the true value as its design allows. Regular maintenance and adherence to prescribed calibration protocols sustain that accuracy. Beyond calibration, better observational practice, such as reading a scale at eye level, further reduces reading error. Digital instruments can also be preferable to analog ones: a numerical display removes the need to interpolate between gradations, although its resolution is still limited by the last displayed digit.
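A minimal sketch of one widely used calibration scheme, two-point linear calibration, follows. It assumes the instrument's error is linear (an offset plus a gain error), so two reference standards of known value are enough to build a correction; the function `two_point_calibration` and the reference readings in the example are hypothetical. The example reuses the scale that reads two grams heavy.

```python
from typing import Callable


def two_point_calibration(raw_low: float, raw_high: float,
                          ref_low: float, ref_high: float) -> Callable[[float], float]:
    """Return a function that maps raw readings to corrected values.

    raw_low/raw_high: what the instrument shows for two reference standards.
    ref_low/ref_high: the known true values of those standards.
    Assumes a linear error model: true = gain * raw + offset.
    """
    if raw_high == raw_low:
        raise ValueError("reference readings must differ")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low

    def correct(raw: float) -> float:
        # Apply the linear correction derived from the two standards.
        return gain * raw + offset

    return correct


# Hypothetical check: the scale shows 2.0 g with nothing on it
# and 102.0 g with a certified 100 g mass.
correct = two_point_calibration(2.0, 102.0, 0.0, 100.0)
print(correct(52.0))  # corrected mass in grams
```

If the instrument is non-linear, two points are not enough, and a multi-point calibration curve would be needed instead.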

Alongside improved instruments, using multiple observers can guard against reading errors. When several operators independently measure the same quantity, their readings can be averaged, which reduces random scatter and can dilute biases peculiar to one observer, such as a habitual tendency to read high. Averaging cannot, however, remove a systematic error shared by every reading, such as one caused by a miscalibrated instrument. This collective approach is particularly useful in collaborative research environments where accuracy is paramount.
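Averaging independent readings is usually reported as a mean together with the standard error of the mean, the sample standard deviation divided by the square root of the number of readings. The sketch below assumes the observers' readings are independent; the helper `combine_readings` and the four readings are hypothetical.

```python
import math
import statistics


def combine_readings(readings: list[float]) -> tuple[float, float]:
    """Return (mean, standard error of the mean) for independent readings."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to estimate scatter")
    mean = statistics.fmean(readings)
    # Sample standard deviation (n - 1 denominator) scaled by 1/sqrt(n).
    sem = statistics.stdev(readings) / math.sqrt(n)
    return mean, sem


# Hypothetical readings of the same length (cm) by four observers.
mean, sem = combine_readings([12.3, 12.5, 12.4, 12.6])
print(f"{mean:.2f} ± {sem:.2f} cm")
```

The standard error only reflects the scatter between observers; a bias common to all of them, such as a shared miscalibrated ruler, does not appear in it.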

It is also prudent to train personnel. Familiarity with the instruments, an understanding of their limitations, and recognition of the conditions that affect measurements foster a culture of vigilance among observers. Training programs that emphasize the psychology of measurement and critical thinking can prevent many reading errors. Redundancy, using multiple measurement techniques to capture the same quantity, also serves as an effective hedge against any single method's shortcomings.
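When redundant methods yield results with different uncertainties, one standard way to merge them is the inverse-variance weighted mean, which weights each result by 1/σ². The article does not prescribe a combination method, so this choice is an assumption, and it is only appropriate when the methods are independent and their results agree within their uncertainties. The function `weighted_mean` and the example values are hypothetical.

```python
import math


def weighted_mean(values: list[float], uncertainties: list[float]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent measurements.

    Each value x_i with standard uncertainty s_i gets weight w_i = 1 / s_i**2.
    Returns (combined value, uncertainty of the combined value).
    """
    if not values or len(values) != len(uncertainties):
        raise ValueError("need matching, non-empty lists")
    if any(s <= 0 for s in uncertainties):
        raise ValueError("uncertainties must be positive")
    weights = [1.0 / s**2 for s in uncertainties]
    total = sum(weights)
    mean = sum(w * x for w, x in zip(weights, values)) / total
    return mean, 1.0 / math.sqrt(total)


# Hypothetical: one length measured with a ruler and with calipers (cm).
print(weighted_mean([12.40, 12.36], [0.05, 0.02]))
```

The more precise method dominates the result, and the combined uncertainty is smaller than either input uncertainty.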

Yet even with all these precautions, reading errors cannot be eliminated entirely, so understanding their extent and their impact on the measurement process is essential. Statistical methods can quantify how reading errors affect the integrity of the data. Error propagation, for example, lets researchers estimate how uncertainties in individual measurements combine into the uncertainty of a final result, which helps in judging the reliability of experimental outcomes.
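For independent inputs with small uncertainties, the first-order propagation formula is σ_f² = Σᵢ (∂f/∂xᵢ)² σᵢ². The sketch below applies it to an arbitrary Python function, estimating each partial derivative with a central finite difference; the function `propagate`, its `rel_step` parameter, and the rectangle example are hypothetical. It ignores correlations between inputs and higher-order terms.

```python
import math
from typing import Callable, Sequence


def propagate(f: Callable[..., float],
              values: Sequence[float],
              sigmas: Sequence[float],
              rel_step: float = 1e-6) -> float:
    """First-order uncertainty of f(*values) for independent inputs.

    sigma_f**2 = sum_i (df/dx_i)**2 * sigma_i**2, with each partial
    derivative estimated by a central finite difference.
    """
    if len(values) != len(sigmas):
        raise ValueError("values and sigmas must have the same length")
    total = 0.0
    for i, (x, s) in enumerate(zip(values, sigmas)):
        h = rel_step * max(abs(x), 1.0)  # step scaled to the input's size
        up = list(values)
        up[i] = x + h
        down = list(values)
        down[i] = x - h
        dfdx = (f(*up) - f(*down)) / (2 * h)
        total += (dfdx * s) ** 2
    return math.sqrt(total)


def rectangle_area(length: float, width: float) -> float:
    return length * width


# Hypothetical read lengths: 2.0 ± 0.1 cm and 3.0 ± 0.2 cm.
print(propagate(rectangle_area, [2.0, 3.0], [0.1, 0.2]))
```

Here ∂A/∂L = 3.0 and ∂A/∂W = 2.0, so σ_A = √(0.3² + 0.4²) = 0.5 cm², which the numerical estimate reproduces up to rounding.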

Appreciating the impact of reading errors ultimately invites reflection on the nature of scientific inquiry itself, and on the delicate interplay between human judgment and instrument fallibility. Scientific rigor demands not only reliance on instruments but also an acute awareness of the subjective lens through which measurements are read and interpreted. By cultivating this awareness, the scientific community sustains a tenacious spirit of inquiry, striving for precision while acknowledging the limitations that invariably accompany the quest for knowledge.

In conclusion, reading error arises from an interaction among instrument, environment, and observer that can compromise the integrity of scientific findings. Recognizing its origins, how it manifests, and the available remedies improves the reliability of data collection. The pursuit of accuracy requires vigilance, a commitment to methodical practice, and ongoing education. As researchers and practitioners navigate measurement error, they engage not only with numerical precision but also with the philosophical challenges it raises, refining how scientific results are interpreted and understood.