Accuracy is a central concern in instrumentation across scientific disciplines, from physics to engineering and beyond. This analysis examines what accuracy means, why it matters, and which factors influence it in measurement and instrumentation.

At its core, accuracy is the degree to which a measured value agrees with the true or accepted reference value. It is distinct from precision, which describes how closely repeated measurements agree with one another, regardless of how close they are to the true value. An instrument can therefore be precise without being accurate. This distinction is fundamental for professionals in fields that depend on careful measurement, such as metrology, calibration, and quality control.
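The accuracy/precision distinction can be made concrete with two summary statistics of repeated readings of a known reference. The first is the bias (mean reading minus true value), which reflects accuracy. The second is the sample standard deviation, which reflects precision. The Python sketch below assumes a reference value of 100.0 and uses made-up readings. The function name `bias_and_spread` is illustrative only.

```python
from statistics import mean, stdev


def bias_and_spread(readings: list[float], true_value: float) -> tuple[float, float]:
    """Return (bias, spread) for repeated readings of one reference quantity.

    bias   = mean(readings) - true_value  -> relates to accuracy
    spread = sample standard deviation    -> relates to precision
    """
    return mean(readings) - true_value, stdev(readings)


# Hypothetical repeated readings of a 100.0 reference.
precise_but_inaccurate = [102.01, 101.99, 102.00, 102.02, 101.98]
accurate_but_imprecise = [98.0, 102.5, 99.5, 101.0, 99.0]

for name, data in [("precise, biased", precise_but_inaccurate),
                   ("unbiased, imprecise", accurate_but_imprecise)]:
    bias, spread = bias_and_spread(data, 100.0)
    print(f"{name}: bias = {bias:+.2f}, spread = {spread:.2f}")
```

The first set clusters tightly around 102, so it is precise but biased by about +2. The second set averages exactly 100 but scatters widely, so it is accurate on average but imprecise.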

Discussions of accuracy usually begin with the two broad categories of measurement error: systematic errors and random errors. Systematic errors arise from identifiable sources and produce a consistent bias in the same direction. They can stem from calibration problems, environmental influences, or instrument design flaws. For instance, an incorrectly calibrated thermometer will consistently read above (or below) the actual temperature, which compromises accuracy. Because a systematic error is repeatable, it can in principle be corrected once its source has been identified and quantified.

Random errors, by contrast, are stochastic. They arise from unpredictable fluctuations in conditions or from human factors, and they scatter readings on both sides of the mean. Their effect can be reduced by taking repeated measurements and analyzing them statistically. Averaging n independent readings shrinks the standard deviation of the mean by a factor of √n. It does not remove a systematic bias, however, so the average converges on the true value only if systematic errors have already been eliminated. Understanding how the two types of error interact is therefore essential in both the theory and the practice of instrumentation.
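A short simulation shows why averaging helps with random error but not with systematic error. It assumes a simple additive model in which each reading equals the true value plus a fixed bias plus Gaussian noise. The bias and noise values are illustrative, and the constants and `simulate_readings` are hypothetical names.

```python
import random
from statistics import mean

# Illustrative model parameters (assumptions, not measured data).
TRUE_VALUE = 25.0   # reference temperature, degrees C
BIAS = 0.8          # systematic offset, e.g. from a miscalibrated thermometer
NOISE_SD = 0.5      # standard deviation of the random error


def simulate_readings(n: int, rng: random.Random) -> list[float]:
    """Each reading = true value + fixed bias + zero-mean Gaussian noise."""
    return [TRUE_VALUE + BIAS + rng.gauss(0.0, NOISE_SD) for _ in range(n)]


rng = random.Random(42)  # fixed seed so the run is repeatable
for n in (1, 10, 100, 10_000):
    mean_error = mean(simulate_readings(n, rng)) - TRUE_VALUE
    std_error = NOISE_SD / n ** 0.5  # expected scatter of the mean of n readings
    print(f"n={n:>6}: mean error {mean_error:+.3f} (std. error of mean {std_error:.3f})")
```

As n grows, the mean error settles near the assumed bias of +0.8 rather than near zero. Averaging shrinks the scatter, but only a comparison against a reference can reveal and remove the offset.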

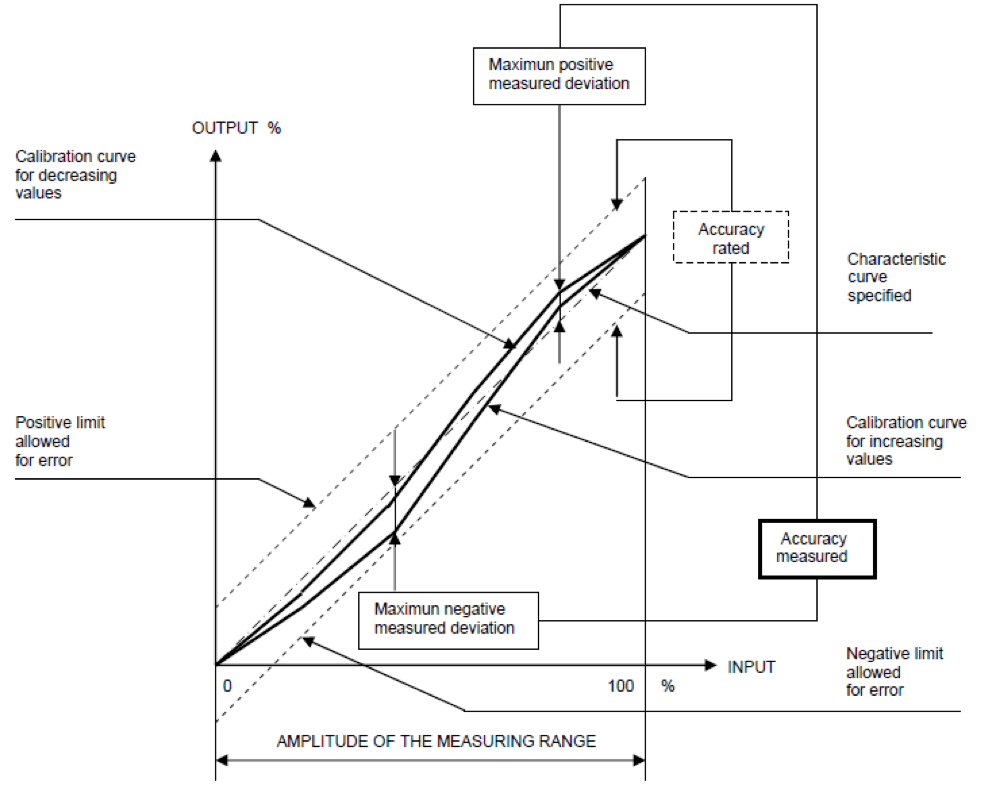

Furthermore, the accuracy of an instrument is often quantified using metrics such as the accuracy percentage, which reflects the deviation of a measured value from a known standard. This is typically expressed using the formula:

$$\text{Accuracy}\,(\%) = \left(1 - \frac{\lvert \text{Measured Value} - \text{True Value} \rvert}{\lvert \text{True Value} \rvert}\right) \times 100\%$$

This figure gives a single, comparable measure of how closely a reading agrees with a reference, which is essential when judging whether a measurement is valid and reliable. It lets scientists and engineers assess instrument performance critically and supports quality assurance in research and industry. The formula is undefined when the true value is zero.
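The formula translates directly into a small helper. This sketch takes the magnitude of the true value in the denominator, so the result is also meaningful for negative reference values. It rejects a true value of zero, for which the ratio is undefined. The name `accuracy_percent` is illustrative and not a standard API.

```python
def accuracy_percent(measured: float, true_value: float) -> float:
    """Accuracy (%) = (1 - |measured - true| / |true|) * 100."""
    if true_value == 0:
        raise ValueError("accuracy percentage is undefined for a true value of zero")
    relative_error = abs(measured - true_value) / abs(true_value)
    return (1.0 - relative_error) * 100.0


# A hypothetical 100.0 kPa reference read as 98.5 kPa:
print(f"{accuracy_percent(98.5, 100.0):.2f} %")  # prints "98.50 %"
```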

Several factors influence accuracy in instrumentation, and instrument design is among the most important. The inherent characteristics of the measuring device, including its resolution and range, directly limit the accuracy it can achieve. For example, a display with a resolution of 0.1 units cannot report a value more finely than that step. Each instrument also has a specified operating range outside which its rated accuracy no longer applies, so its specifications must be understood before it is used. The measurement technique matters as well. Reading an analog scale depends on the user's interpretation (parallax, interpolation between graduations). A digital display removes that ambiguity, although it introduces its own quantization step.
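The sketch below shows how range and resolution bound what an instrument can report. It models an idealized digital instrument with a rated range and a fixed number of displayed decimal places. The class and its values are hypothetical. A real datasheet would also state an accuracy tolerance (often as a percentage of reading or of full scale) on top of the display resolution.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DigitalInstrument:
    """Idealized digital instrument (hypothetical spec, for illustration only)."""
    range_min: float
    range_max: float
    decimals: int  # digits shown after the decimal point

    @property
    def resolution(self) -> float:
        # Smallest displayable step, e.g. 0.1 for one decimal place.
        return 1.0 / 10 ** self.decimals

    def max_quantization_error(self) -> float:
        # Rounding to the nearest step can be off by at most half a step.
        return self.resolution / 2

    def display(self, true_input: float) -> float:
        """Return the value shown for a true input, or raise if it is out of range."""
        if not self.range_min <= true_input <= self.range_max:
            raise ValueError(
                f"{true_input} is outside the rated range [{self.range_min}, {self.range_max}]"
            )
        # The display cannot show detail finer than its resolution.
        return round(true_input, self.decimals)


meter = DigitalInstrument(range_min=0.0, range_max=50.0, decimals=1)
print(meter.display(12.34))            # 12.3
print(meter.max_quantization_error())  # 0.05
```

Even a perfect sensor behind this display can be off by up to half a display step, which is 0.05 in this example. Inputs beyond the rated range are rejected rather than silently reported.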

Environmental factors also influence accuracy. Temperature, humidity, and atmospheric pressure can significantly alter the behavior of sensitive instruments. Calibration must therefore take these variables into account, or measurement accuracy will be compromised. For example, the output of electronic devices may drift under large temperature variations and produce erroneous readings.
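When an instrument's temperature sensitivity has been characterized, a common remedy is to measure the ambient temperature and correct the reading in software. The sketch assumes a purely linear gain drift of the form reading = true × (1 + k(T − T_ref)). The coefficient and reference temperature are illustrative values, not figures from any real device. Offset drift and nonlinear effects are ignored.

```python
# Hypothetical values for illustration; real coefficients come from a datasheet
# or from characterizing the specific instrument.
T_REF_C = 25.0        # temperature at which the sensor was calibrated, degrees C
GAIN_TEMPCO = 0.0002  # assumed gain drift per degree C (0.02 % of reading per degree C)


def compensate(reading: float, ambient_c: float) -> float:
    """Remove an assumed linear gain drift: reading = true * (1 + k * (T - T_ref))."""
    drift_factor = 1.0 + GAIN_TEMPCO * (ambient_c - T_REF_C)
    return reading / drift_factor


# Under this model, a true 10.000 V input read at 45 C would show about 10.040 V.
raw = 10.0 * (1.0 + GAIN_TEMPCO * (45.0 - T_REF_C))
print(f"raw = {raw:.3f}, compensated = {compensate(raw, 45.0):.3f}")
```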

Calibration itself is a major determinant of accuracy. It involves comparing an instrument's output against a known reference standard. The instrument is then adjusted, or correction factors are recorded, so that its readings agree with the standard within a stated tolerance. Regular recalibration is essential because wear and changing environmental conditions cause accuracy to drift over time. Failing to calibrate appropriately can produce substantial errors. These errors not only mislead experiments but can also have serious consequences in industries such as pharmaceuticals, where measurement accuracy is non-negotiable.
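One simple calibration procedure is a two-point (offset and gain) correction. The instrument is read at two reference standards, and subsequent readings are mapped linearly onto the reference scale. The sketch assumes the instrument's error is linear between the two points. The class name and the example readings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoPointCalibration:
    """Linear (offset + gain) correction derived from two reference standards."""
    raw_low: float   # instrument reading at the low reference
    raw_high: float  # instrument reading at the high reference
    ref_low: float   # true value of the low reference
    ref_high: float  # true value of the high reference

    def __post_init__(self) -> None:
        if self.raw_high == self.raw_low:
            raise ValueError("the two raw readings must differ")

    @property
    def gain(self) -> float:
        return (self.ref_high - self.ref_low) / (self.raw_high - self.raw_low)

    def correct(self, raw: float) -> float:
        """Map a raw reading onto the reference scale."""
        return self.ref_low + self.gain * (raw - self.raw_low)


# Hypothetical thermometer checked in an ice bath (0.0 C) and in boiling water
# (100.0 C at standard atmospheric pressure).
cal = TwoPointCalibration(raw_low=0.6, raw_high=101.1, ref_low=0.0, ref_high=100.0)
print(f"{cal.correct(50.0):.2f}")  # a raw 50.0 becomes about 49.15
```

When the error is not linear, or when more reference standards are available, a multi-point calibration with a least-squares fit extends the same idea.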

Moreover, user proficiency cannot be overlooked. The skill and training of the personnel operating the instrumentation significantly affect accuracy. Proper operational practices, including adherence to standardized procedures for measurement and handling, play a vital role in ensuring that the data obtained is accurate. Lack of knowledge about equipment limitations or incorrect usage techniques can introduce errors that might be misconstrued as inherent inaccuracies of the instrument itself.

Technological advances have also improved the accuracy that instruments can achieve. Digital sensors and automated data acquisition systems reduce human error and improve measurement precision and reliability. Feedback control systems can shorten response times and improve accuracy by making real-time adjustments as environmental conditions fluctuate.

The significance of accuracy varies across fields of application. In research, it is essential for validating hypotheses and reproducing results. In manufacturing, accuracy can directly affect product quality and compliance with regulatory standards. In environmental monitoring, accurate data is crucial for assessing ecological effects and implementing effective solutions.

In conclusion, the concept of accuracy in instrumentation is multifaceted, encompassing various dimensions including types of errors, influencing factors, and application significance. The pursuit of accuracy is fundamental in sustaining the integrity of experimental and practical outcomes across disciplines, positioning it as a cornerstone of reliable science and technology. By fostering a thorough understanding of accuracy, individuals and organizations can enhance their operational standards, driving advancement in measurement science and applied technology.