Entropy, a pivotal concept in thermodynamics, quantifies the degree of disorder or randomness in a system. This measure has profound implications not just in physics but also in other domains, including information theory, statistical mechanics, and cosmology. Entropy bounds, in particular, place fundamental limits on how much entropy, and therefore how much information, a physical system of a given size and energy can hold. Understanding these bounds and their interplay with disorder leads to a deeper comprehension of both macroscopic and microscopic phenomena in the universe.

The concept of entropy originated in the 19th-century work of Rudolf Clausius, who formulated the Second Law of Thermodynamics and coined the term “entropy.” The law states that the total entropy of an isolated system never decreases over time: it remains constant only in idealized reversible processes and increases in all real, irreversible ones. Consequently, natural processes tend to evolve toward a state of maximum entropy, or equilibrium, in which energy is distributed uniformly throughout the system. The implications of this law stretch far beyond classical thermodynamics and into statistical mechanics.
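To make the Second Law concrete, here is a minimal Python sketch (an illustration, not drawn from the original text) that mixes equal masses of hot and cold water in an insulated container and tallies the entropy change of each portion. It assumes a constant specific heat for water (the value `C_WATER` is approximate) and uses the standard result that heating a body of constant heat capacity from T₁ to T₂ changes its entropy by m c ln(T₂/T₁). The function names are hypothetical.

```python
import math

# Specific heat capacity of liquid water, J/(kg·K); assumed constant (approximation).
C_WATER = 4186.0

def entropy_change(mass_kg: float, t_initial_k: float, t_final_k: float) -> float:
    """Entropy change of a body with constant heat capacity taken from t_initial_k
    to t_final_k: integrating dS = dQ/T with dQ = m c dT gives m c ln(T_final / T_initial)."""
    return mass_kg * C_WATER * math.log(t_final_k / t_initial_k)

def mix_equal_masses(t_hot_k: float, t_cold_k: float, mass_kg: float = 1.0):
    """Mix two equal masses of water in an insulated container.
    Returns (final temperature, ΔS_hot, ΔS_cold, ΔS_total)."""
    t_final = (t_hot_k + t_cold_k) / 2.0                  # energy balance for equal masses
    ds_hot = entropy_change(mass_kg, t_hot_k, t_final)    # negative: the hot water cools
    ds_cold = entropy_change(mass_kg, t_cold_k, t_final)  # positive: the cold water warms
    return t_final, ds_hot, ds_cold, ds_hot + ds_cold

# 80 °C water mixed with 20 °C water, temperatures in kelvin.
t_f, ds_hot, ds_cold, ds_total = mix_equal_masses(353.15, 293.15)
print(f"T_final = {t_f:.2f} K")
print(f"ΔS_hot = {ds_hot:.1f} J/K, ΔS_cold = {ds_cold:.1f} J/K, total = {ds_total:.1f} J/K")
```

The hot water loses entropy, but the cold water gains more, so the total is positive. This holds for any two unequal starting temperatures, because the total equals m c ln(T_f² / (T_hot T_cold)) and the final temperature, being the arithmetic mean of the two, always exceeds their geometric mean.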

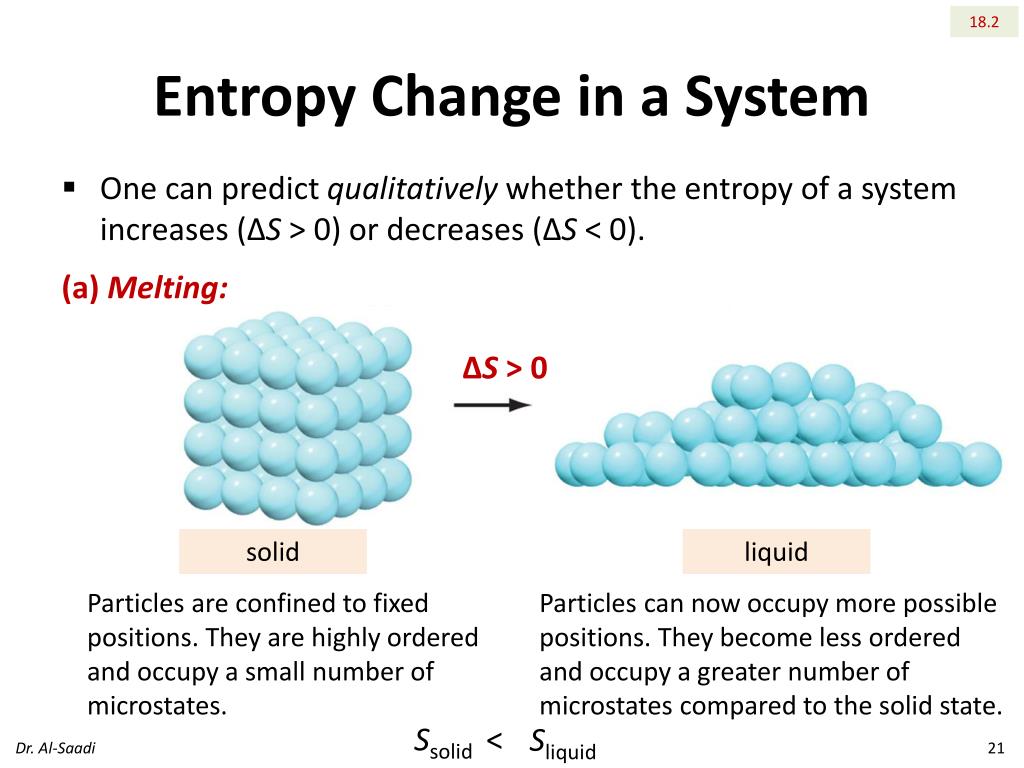

Statistical mechanics, pioneered by Ludwig Boltzmann, provides a microscopic perspective on entropy. Boltzmann's statistical definition of entropy, S = k ln Ω, relates the entropy S to the number Ω of accessible microstates consistent with the system's macroscopic conditions, where k is the Boltzmann constant and ln is the natural logarithm. The more microstates available to a system, the higher its entropy, so disorder can be quantified by counting microstate configurations.
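Boltzmann's formula can be illustrated by counting microstates in a toy model. The sketch below assumes a hypothetical system of N independent two-state particles (for example, spins that point up or down), where the macrostate “n particles point up” has Ω = C(N, n) microstates. The model and the function name are illustrative choices, not taken from the original text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the 2019 SI)

def boltzmann_entropy(num_microstates: int) -> float:
    """S = k_B ln Ω for a macrostate with Ω accessible microstates."""
    return K_B * math.log(num_microstates)

# Toy model (illustrative assumption): N independent two-state particles.
# The macrostate "n_up particles point up" is realized by C(N, n_up) microstates,
# one for each way of choosing which particles point up.
N = 100
for n_up in (0, 10, 25, 50):
    omega = math.comb(N, n_up)
    print(f"n_up = {n_up:3d}: Ω = {omega:.3e}, S = {boltzmann_entropy(omega):.3e} J/K")
```

The evenly split macrostate (n_up = 50) has the most microstates and therefore the highest entropy, while the perfectly ordered macrostate (n_up = 0) has exactly one microstate and zero entropy.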

One intriguing aspect of entropy is its connection to information. In information theory, Shannon entropy quantifies the uncertainty associated with a random variable: H = −Σ p_i log p_i, where p_i is the probability of outcome i. This overlap between physics and information theory has prompted discussion of the “information paradox,” particularly in the context of black holes. In the 1970s, Jacob Bekenstein argued that a black hole must carry an entropy proportional to the area of its event horizon, and Stephen Hawking's discovery that black holes emit thermal radiation fixed the constant of proportionality, yielding the Bekenstein-Hawking formula S = kc³A/(4Għ), where A is the horizon area, c the speed of light, G the gravitational constant, and ħ the reduced Planck constant. Hawking's analysis also suggested that a black hole evaporating through this radiation would retain no trace of the information that fell into it, in apparent conflict with quantum mechanics, which requires information to be preserved. Reconciling black-hole entropy with the conservation of information remains an open research problem, and the effort has yielded new insights into the nature of disorder in extreme gravitational environments.
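A minimal Python sketch of Shannon entropy, together with a check of how it relates to Boltzmann's formula. The function name and the default of base-2 logarithms (entropy measured in bits) are assumptions made for illustration. For Ω equally likely outcomes the Shannon entropy is log₂ Ω bits, so Boltzmann's S = k ln Ω equals k ln 2 times that value.

```python
import math
from typing import Sequence

K_B = 1.380649e-23  # Boltzmann constant, J/K

def shannon_entropy(probs: Sequence[float], base: float = 2.0) -> float:
    """H = Σ p_i log(1/p_i), i.e. -Σ p_i log p_i. Outcomes with p_i = 0 are
    skipped, following the convention 0 · log 0 = 0."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log(1.0 / p, base) for p in probs if p > 0)

print(shannon_entropy([0.5, 0.5]))  # fair coin: maximal uncertainty for two outcomes
print(shannon_entropy([0.9, 0.1]))  # biased coin: less uncertainty
print(shannon_entropy([1.0]))       # certain outcome: no uncertainty

# Uniform distribution over Ω microstates: H = log2(Ω) bits, and
# Boltzmann's S = k_B ln Ω equals (k_B ln 2) · H.
omega = 8
h_bits = shannon_entropy([1.0 / omega] * omega)
print(h_bits, K_B * math.log(2) * h_bits, K_B * math.log(omega))
```

Writing each term as p log(1/p) rather than −p log p keeps the certain-outcome case at a clean positive zero.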

An entropy bound asserts that the total entropy of a physical system cannot exceed a limit set by the size of the region that encloses it. The Bekenstein bound makes this quantitative: a system of total energy E (including its rest-mass energy) that fits inside a sphere of radius R has entropy S ≤ 2πkRE/(ħc), where k is the Boltzmann constant, ħ the reduced Planck constant, and c the speed of light. The bound grows in proportion to both the enclosed energy and the radius, so adding energy raises the ceiling on entropy rather than forcing the entropy itself to rise. A related holographic bound limits the entropy of a region by the area of its boundary, and a Schwarzschild black hole saturates the Bekenstein bound exactly, reaffirming the link between energy, entropy, and disorder.
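As a numerical check, the sketch below evaluates the Bekenstein bound for a solar-mass Schwarzschild black hole, taking R to be its Schwarzschild radius r_s = 2GM/c² and E = Mc², and compares it with the Bekenstein-Hawking entropy S = kc³A/(4Għ) with horizon area A = 4πr_s². The constants are standard SI/CODATA values, the solar mass is rounded, and the function names are hypothetical.

```python
import math

# Physical constants (SI). G is the CODATA 2018 value; c, ħ and k_B follow the 2019 SI.
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0       # speed of light, m/s
HBAR = 1.054571817e-34  # reduced Planck constant, J·s
K_B = 1.380649e-23      # Boltzmann constant, J/K

def bekenstein_bound(radius_m: float, energy_j: float) -> float:
    """Maximum entropy of a system with energy E inside a sphere of radius R:
    S_max = 2π k_B R E / (ħ c)."""
    return 2 * math.pi * K_B * radius_m * energy_j / (HBAR * C)

def bekenstein_hawking_entropy(mass_kg: float) -> float:
    """Entropy of a Schwarzschild black hole, S = k_B c^3 A / (4 G ħ),
    with horizon area A = 4π r_s^2 and r_s = 2 G M / c^2."""
    r_s = 2 * G * mass_kg / C**2
    area = 4 * math.pi * r_s**2
    return K_B * C**3 * area / (4 * G * HBAR)

M_SUN = 1.989e30  # kg, approximate solar mass
r_s = 2 * G * M_SUN / C**2
s_bh = bekenstein_hawking_entropy(M_SUN)
s_max = bekenstein_bound(r_s, M_SUN * C**2)  # R = Schwarzschild radius, E = M c^2

print(f"r_s = {r_s:.0f} m")
print(f"S_BH = {s_bh:.3e} J/K, Bekenstein bound = {s_max:.3e} J/K, ratio = {s_bh / s_max:.6f}")
```

The ratio comes out as 1 up to floating-point rounding: both expressions reduce algebraically to 4πkGM²/(ħc), which is the sense in which a black hole saturates the bound. For one solar mass this is roughly 10⁷⁷ in units of k.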

Beyond black holes, entropy and its bounds bear on the cosmos as a whole. In cosmology, the early universe is thought to have been in a state of extremely low entropy. As the universe expanded and evolved, its entropy increased, progressing toward states of higher disorder. This trajectory helps explain how cosmological phenomena unfold and points to continued growth of disorder in the universe’s future, potentially culminating in a scenario often referred to as “heat death,” in which no usable energy differences remain.

The concept of entropy has also been extended to complex systems and emergent phenomena, including ecological systems, social interactions, and economic behavior, where it can serve as a measure of diversity, disorder, and information flow. As such systems evolve, intricate interactions and feedback mechanisms give rise to novel structures and patterns. These systems are open, however, so the second law applies to each system together with its environment: any order a subsystem builds must be paid for by a larger entropy increase elsewhere, and total entropy still tends toward a maximum.
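In ecology, entropy as a diversity measure is commonly computed as the Shannon diversity index H′ = −Σ pᵢ ln pᵢ, where pᵢ is the fraction of individuals belonging to species i, often paired with Pielou's evenness J′ = H′ / ln S for S species. The sketch below compares two communities; the species counts are hypothetical values invented for illustration, not survey data, and the function name is likewise hypothetical.

```python
import math

def shannon_diversity(counts: list[int]) -> float:
    """Shannon diversity index H' = -Σ p_i ln p_i (in nats), where p_i is the
    fraction of all individuals that belong to category i."""
    total = sum(counts)
    # p * ln(1/p) equals -p * ln(p); categories with a zero count are skipped.
    return sum((c / total) * math.log(total / c) for c in counts if c > 0)

# Hypothetical species counts, invented purely for illustration.
communities = {
    "even meadow": {"clover": 25, "grass": 25, "daisy": 25, "thistle": 25},
    "mown lawn": {"clover": 5, "grass": 90, "daisy": 3, "thistle": 2},
}

for name, species in communities.items():
    counts = list(species.values())
    h = shannon_diversity(counts)
    evenness = h / math.log(len(counts))  # Pielou's J' = H' / ln(S); 1.0 means perfectly even
    print(f"{name}: H' = {h:.3f} nats, evenness J' = {evenness:.3f}")
```

Both communities contain the same four species, but the lawn is dominated by one of them, so its entropy, and hence its measured diversity, is much lower.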

Self-organization shows that entropy can decrease locally within particular systems. This apparent paradox permits the emergence of ordered structures, such as biological organisms or crystal lattices, without contradicting the second law, which requires only that the total entropy of an isolated system not decrease. Local decreases are possible because these systems are open: exchanges of energy, or information flow, with external sources let them export entropy to their surroundings (for example, as released heat), highlighting the delicate balance between order and disorder in dynamic systems.
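The entropy bookkeeping behind such a local decrease can be sketched for water freezing into an ice crystal. This is an idealized model under stated assumptions: the water is taken to sit at its melting point while it freezes, the latent heat of fusion is the approximate value `L_FUSION`, and the released heat flows into surroundings treated as a reservoir at an assumed constant temperature `T_SURR`. The function name is hypothetical.

```python
# Idealized sketch with assumed values: the water stays at its melting point while
# freezing, and the surroundings act as a reservoir at a fixed, colder temperature.
L_FUSION = 3.34e5  # J/kg, approximate latent heat of fusion of water
T_MELT = 273.15    # K, melting point of ice at atmospheric pressure
T_SURR = 263.15    # K, assumed temperature of the surroundings (-10 °C)

def freezing_entropy_budget(mass_kg: float, t_melt: float, t_surr: float, latent: float):
    """Return (ΔS_water, ΔS_surroundings, ΔS_total) in J/K for freezing mass_kg of water."""
    heat = mass_kg * latent    # heat released as the liquid orders into ice
    ds_water = -heat / t_melt  # the water loses entropy at its own temperature
    ds_surr = heat / t_surr    # the surroundings absorb the same heat at a lower temperature
    return ds_water, ds_surr, ds_water + ds_surr

ds_water, ds_surr, ds_total = freezing_entropy_budget(1.0, T_MELT, T_SURR, L_FUSION)
print(f"ΔS_water = {ds_water:.1f} J/K, ΔS_surr = {ds_surr:.1f} J/K, total = {ds_total:.1f} J/K")
```

The water's entropy falls as it orders into ice, but the surroundings gain more because they absorb the same heat at a lower temperature. Passing a surroundings temperature above `T_MELT` makes the total negative, consistent with the fact that water does not spontaneously freeze in a warm room.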

The study of entropy bounds and disorder raises stimulating philosophical questions about determinism and the nature of reality. If entropy ultimately drives the universe toward disorder, can any system sustain intrinsic order, or is disorder an inevitable fate? These inquiries resonate with foundational questions in science and metaphysics, illuminating the intricate relationship between entropy, information, and existence itself.

In summary, the interplay between entropy bounds and disorder is more than an academic exercise; it is a conceptual linchpin for understanding the behavior of systems across scales, from the microscopic to the cosmic. By examining order, disorder, and the fundamental limits that entropy imposes, we gain insight into both the physical laws governing our universe and the philosophical questions they raise. The effort to unravel these questions continues to shape our understanding of the cosmos and to challenge our basic perceptions of existence and reality.