Instrumental error in measurement devices is an intriguing phenomenon that often perplexes scientists and students alike. Have you ever wondered why two precise measuring devices can give slightly different results for the same object? Part of the answer lies in the concept of “least count”: the finest division an instrument can resolve. Knowing how to estimate instrumental error from the least count is essential for anyone working in experimental physics or engineering. This article explains what least count is, how it relates to instrumental error, and how to combine the two when reporting a measurement.

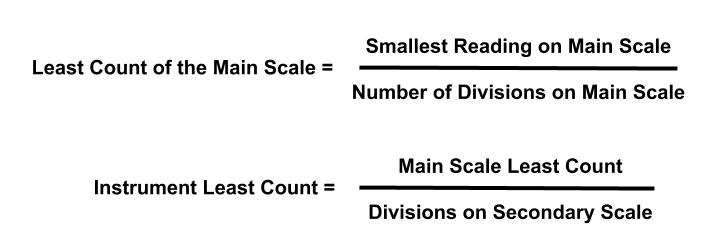

To begin, let us define “least count.” The least count of an instrument is the smallest change in a quantity that it can resolve, usually the value of one division on its scale. For instance, a ruler with millimeter markings has a least count of 1 mm, while a digital caliper may have a least count of 0.01 mm. The least count sets a hard limit on precision: no single reading from the instrument can be trusted to a finer resolution than this. Reading an instrument more finely than its least count, for example by estimating by eye between scale marks, adds uncertainty. This is where instrumental error comes in.
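To make the idea concrete, here is a minimal Python sketch of an idealized instrument. It assumes, as a simplification rather than a claim about any real device, that the display rounds the true value to the nearest multiple of the least count (some instruments truncate instead). The function name `read_with_least_count` is hypothetical. Lengths are given in millimeters so the arithmetic stays exact.

```python
def read_with_least_count(true_value: float, least_count: float) -> float:
    """Hypothetical model of an ideal instrument: report the true value
    rounded to the nearest multiple of the least count.

    Assumption: the instrument rounds (a real device might truncate).
    Python's round() sends exact halves to the even neighbour.
    """
    if least_count <= 0:
        raise ValueError("least_count must be positive")
    return round(true_value / least_count) * least_count


# Two rods of 302 mm and 304 mm look identical on a 1 cm (10 mm) scale...
print(read_with_least_count(302.0, 10.0))  # 300.0
print(read_with_least_count(304.0, 10.0))  # 300.0
# ...but are told apart by an instrument with a 1 mm least count.
print(read_with_least_count(302.0, 1.0))   # 302.0
print(read_with_least_count(304.0, 1.0))   # 304.0
```

Any difference smaller than the least count is simply invisible to the instrument, which is why the least count belongs in every error estimate.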

Measurement error, in general, is the discrepancy between the measured value and the true value of the quantity being assessed. It arises from several sources: instrument calibration, environmental influences, and even the observer’s interpretation of the scale. Instrumental error is the part of this discrepancy that comes from the instrument itself, such as faulty calibration or its limited resolution, which is set by its least count. Understanding it is crucial in laboratory settings where precision is paramount. The rest of this article examines how least count and instrumental error are related.

Now, let us test our understanding. Imagine you are measuring the length of a metal rod with a meter stick graduated only in whole centimeters, so its least count is 1 cm. Because you must judge by eye where the end of the rod falls between two marks, repeated readings fluctuate: 30 cm, 30.5 cm, 31 cm, and 31.5 cm. The half-centimeter values are estimates finer than the least count, so none of them can be taken at face value. Which value best reflects the true length, and how large is the associated instrumental error? Answering this calls for a systematic procedure.

The first step in calculating instrumental error is to take several readings of the same quantity. Their mean gives a more reliable estimate of the true length than any single reading. To calculate the mean, sum the readings and divide by the number of readings. For the readings above (30 cm, 30.5 cm, 31 cm, and 31.5 cm), the mean is:

Mean = (30 + 30.5 + 31 + 31.5) / 4 = 30.75 cm
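The same arithmetic in a few lines of Python, as a small illustration. It uses the standard library's `statistics.fmean` (available since Python 3.8), and the readings are the four values from the example, in centimeters.

```python
from statistics import fmean

# The four repeated readings from the example, in cm.
readings_cm = [30.0, 30.5, 31.0, 31.5]

# Arithmetic mean: sum of the readings divided by their count.
mean_cm = fmean(readings_cm)
print(f"Mean = {mean_cm:.2f} cm")  # Mean = 30.75 cm
```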

With the mean established, we can quantify how much the readings scatter around it. To do so, find the deviation of each reading from the mean by subtracting the mean from that reading. For our example, the deviations are:

- 30 - 30.75 = -0.75 cm

- 30.5 - 30.75 = -0.25 cm

- 31 - 30.75 = 0.25 cm

- 31.5 - 30.75 = 0.75 cm
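A short Python sketch that reproduces the list of deviations for the example readings. It also checks a general property: deviations from the mean always sum to zero, which is why their magnitudes, not their sum, describe the spread.

```python
from statistics import fmean

readings_cm = [30.0, 30.5, 31.0, 31.5]
mean_cm = fmean(readings_cm)

# Deviation = reading minus mean; negative values lie below the mean.
deviations_cm = [r - mean_cm for r in readings_cm]

for reading, dev in zip(readings_cm, deviations_cm):
    print(f"{reading} - {mean_cm} = {dev:+.2f} cm")

# Deviations from the mean sum to zero (up to floating-point rounding),
# so the sum alone says nothing about how widely the readings scatter.
print(f"Sum of deviations = {sum(deviations_cm):.2f} cm")
```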

Next, we take the largest of these deviations in absolute value. This maximum absolute deviation is a worst-case measure of how far any single reading strayed from the mean; in our case it is 0.75 cm. However, the scatter of the readings is not the whole story: no reading can be more precise than the instrument’s least count. With a least count of 1 cm, each reading carries up to 1 cm of uncertainty from the instrument alone, and that must be included alongside the scatter.
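A minimal Python sketch of this step, using the example readings and the 1 cm least count stated in the scenario. It computes the maximum absolute deviation and shows that, here, the scatter is smaller than the instrument’s resolution.

```python
from statistics import fmean

readings_cm = [30.0, 30.5, 31.0, 31.5]
least_count_cm = 1.0  # meter stick graduated in whole centimeters

mean_cm = fmean(readings_cm)

# Largest distance of any single reading from the mean.
max_abs_dev_cm = max(abs(r - mean_cm) for r in readings_cm)
print(f"Maximum absolute deviation = {max_abs_dev_cm:.2f} cm")  # 0.75 cm

# The scatter is smaller than the instrument's resolution,
# so the least count cannot be ignored when stating the error.
print(max_abs_dev_cm < least_count_cm)  # True
```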

Combining the largest deviation with the least count gives the total instrumental error. The simplest conservative rule is to add the two magnitudes:

Instrumental Error = ±(Maximum Absolute Deviation + Least Count)

Incorporating this into our example yields:

Instrumental Error = ±(0.75 cm + 1 cm) = ±1.75 cm

Therefore, the length should be reported as 30.75 ± 1.75 cm: the true length plausibly lies between 29.00 cm and 32.50 cm. Combining the least count with the maximum deviation in this way gives a fuller picture of how reliable the measurement is than either quantity alone.
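Putting the steps together, here is a small, hypothetical helper, `measurement_interval`, that applies the additive rule used above (maximum absolute deviation plus least count). Adding the two magnitudes is a deliberately conservative, worst-case choice. Other conventions, such as using half the least count or combining independent uncertainties in quadrature, give narrower intervals, so treat this as a sketch of the rule described here rather than a universal standard.

```python
from statistics import fmean


def measurement_interval(readings, least_count):
    """Hypothetical helper: return (mean, total_error, low, high).

    total_error = max |reading - mean| + least_count, i.e. the simple
    worst-case sum of the scatter and the instrument's resolution.
    """
    if not readings:
        raise ValueError("need at least one reading")
    mean = fmean(readings)
    max_abs_dev = max(abs(r - mean) for r in readings)
    total_error = max_abs_dev + least_count
    return mean, total_error, mean - total_error, mean + total_error


mean, err, low, high = measurement_interval([30.0, 30.5, 31.0, 31.5], least_count=1.0)
print(f"L = {mean:.2f} ± {err:.2f} cm, i.e. {low:.2f} cm to {high:.2f} cm")
# L = 30.75 ± 1.75 cm, i.e. 29.00 cm to 32.50 cm
```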

Importantly, the least count does more than state a measuring tool’s limitation; it also tells us how far our experimental conclusions can be trusted. When important decisions rest on measurements, it is essential to report not just the measured values but also their uncertainties. In fields that depend on precise measurement, such as physics, engineering, and environmental science, this understanding is integral to analyzing and interpreting data.

In conclusion, calculating instrumental error from the least count gives researchers a simple, systematic way to state how trustworthy their measurements are. By taking repeated readings, examining their scatter, and accounting for the instrument’s resolution, one can handle the intricacies of scientific measurement with confidence. Whether one is conducting a laboratory experiment or doing field research, a thorough understanding of least count and instrumental error remains indispensable in the pursuit of scientific truth.